
As my customers continue to embrace hybrid cloud environments, the need for efficient and flexible cloud management solutions becomes more critical. VMware Cloud Foundation (VCF) 5.2 introduces several enhancements designed to address these needs, focusing on improving lifecycle management, scalability, security, and flexibility. Let's dive into the key features and updates in VCF 5.2 and see how they can benefit your cloud strategy.

Seamlessly Transition to Cloud Foundation

One of the standout features of VCF 5.2 is the ability to import existing vSphere infrastructure into Cloud Foundation. This capability extends the SDDC Manager's inventory and lifecycle management to your current infrastructure, making the transition smoother and less disruptive. There are two primary use cases:

Flexible Edge Architectures for Diverse Needs

VCF 5.2 offers a range of edge architecture options to cater to various deployment scenarios:

Enhanced Lifecycle Management

Lifecycle management is at the core of VCF 5.2, with significant improvements to streamline operations:

vSphere Live Patching

VCF 5.2 introduces a new category of ESXi patches focused on security fixes that can be applied without rebooting the host. Live patching uses Partial Maintenance Mode, so VMs do not have to be evacuated and the host does not have to enter full maintenance mode, which significantly reduces downtime and administrative effort.

Robust Security and Compliance

Security enhancements in VCF 5.2 include:

Hands-On Learning and Support

To help customers get the most out of VCF 5.2, VMware offers a variety of hands-on platforms:

Comprehensive Resources

VMware ensures that you have all the resources you need to maximize the benefits of VCF 5.2. From detailed documentation and demo pages to blogs and community forums, there's a wealth of information to support your journey.

VMware Cloud Foundation 5.2 represents a significant step forward in hybrid cloud management. With its enhanced lifecycle management, flexible edge architectures, robust security features, and comprehensive support resources, VCF 5.2 is designed to help organizations streamline operations, improve security, and scale efficiently. Whether you're transitioning existing infrastructure or deploying new environments, VCF 5.2 provides the tools and capabilities to meet your cloud management needs.

For more information, visit the VMware Cloud Foundation page and explore the latest documentation.


In the current landscape shaped by Broadcom's influence on VMware's trajectory, organizations considering staying with VMware might find it prudent to explore transitioning to a hybrid cloud setup. Opting for the right infrastructure becomes paramount to ensure optimal performance and scalability. Among the offerings in the revamped portfolio, VMware Cloud Foundation (VCF) emerges as a favored option, thanks to its robust software-defined data center (SDDC) capabilities.

Amid Broadcom's streamlined portfolio, featuring VMware vSphere Foundation and VMware Cloud Foundation, loyal VMware customers have a compelling incentive to opt for a dedicated solution. Combining VCF with Dell VxRail presents an attractive proposition. Not only is VxRail custom-built for VCF, but it also offers the flexibility to integrate third-party storage alongside VMware vSAN. This is important for customers who have already invested in external storage systems or have a use case that requires them. This combination sets itself apart with its seamless integration, streamlined management, and enhanced performance. Consequently, deploying VMware Cloud Foundation on Dell VxRail emerges as the prime selection.

Tailored Integration and Optimization

Dell VxRail is specifically designed with VMware environments in mind, which makes it an ideal platform for VMware Cloud Foundation. The integration goes beyond general compatibility; Dell VxRail comes pre-configured with VMware vSAN and is fully optimized for VMware environments. This deep integration allows businesses to leverage a hyper-converged infrastructure that simplifies and streamlines deployment, management, and scaling of VMware-based applications.

Simplified Deployment and Management

One of the standout features of running VCF on VxRail is the simplified deployment process.
VxRail Manager, along with VMware SDDC Manager, provides a unified management experience that automates the deployment and configuration of VMware components. This integration reduces the complexity typically associated with setting up a hybrid cloud environment, enabling IT teams to focus more on strategic operations rather than routine setups.

Scalability and Flexibility

Scalability is a critical consideration for organizations aiming to thrive in a dynamic market. VxRail presents a scalable architecture that evolves alongside your business requirements. Whether expanding vertically or horizontally, VxRail offers the flexibility to incorporate additional nodes or integrate new technologies without disrupting ongoing operations. This adaptability empowers organizations to extend their VMware Cloud Foundation environment in a cost-effective and efficient manner. Additionally, as part of scalability, customers can extend to VMware-based hybrid cloud offerings such as VMware Cloud on AWS or the Azure VMware Solution, further enhancing flexibility and agility.

Enhanced Performance and Reliability

Running VCF on Dell VxRail brings the advantage of optimized performance. VxRail nodes are equipped with high-performance processors and memory options, tailored for the data-intensive applications that are typical in VMware environments. Moreover, Dell's proactive support and single-point-of-contact service significantly enhance the reliability of the infrastructure, helping to keep availability high and downtime minimal.

Streamlined Support and Services

Choosing Dell VxRail for running VMware Cloud Foundation simplifies the support process. Since Dell and VMware are closely partnered, VxRail comes with integrated support for both hardware and software components. This means quicker resolution of issues and less downtime, which is crucial for maintaining continuous business operations.
The cohesive support structure is a significant advantage for IT departments that manage complex environments.

Final Thoughts

For businesses leveraging VMware Cloud Foundation, Dell VxRail serves as a synergistic platform that enhances VCF's capabilities through superior integration, simplified management, scalability, optimized performance, and streamlined support. This combination not only boosts the efficiency of VMware deployments but also provides a resilient infrastructure supporting business growth and technological advancement. Moreover, with Broadcom's streamlined portfolio featuring VCF, customers committed to VMware environments find compelling reasons to opt for this integration. Dell VxRail's compatibility with third-party storage solutions alongside VMware vSAN offers considerable flexibility, making it an ideal choice for enterprises seeking to optimize their hybrid cloud strategy while retaining the ability to tailor their storage requirements. Alongside these benefits, incorporating cloud offerings such as VMware Cloud on AWS or the Azure VMware Solution enhances scalability and agility, enabling organizations to extend their VMware environment to the cloud.

For organizations seeking to maximize their VMware infrastructure, running VMware Cloud Foundation on Dell VxRail presents a strategic and operationally advantageous choice, offering substantial benefits for both current and future needs.

As a seasoned professional deeply entrenched in the world of virtualization, I've witnessed firsthand the recent shifts that have sent shockwaves through the industry. From the unprecedented Broadcom licensing changes to the evolving dynamics of cloud migration, the landscape is changing at a rapid pace, leaving many businesses scrambling to adapt. But amidst this uncertainty, one thing remains clear: a knee-jerk reaction to switch hypervisors isn't the silver bullet solution.
Instead, what's needed is a comprehensive hybrid cloud strategy that not only addresses immediate challenges but also sets the stage for long-term success. Let's dissect this further.

The recent Broadcom licensing changes have left many customers reeling from unexpected cost increases. While the allure of public cloud services may seem enticing, the reality is that self-managed infrastructure often proves to be more cost-effective, especially for those with existing resources and expertise. This is where a well-crafted hybrid cloud strategy becomes invaluable. Rather than simply swapping out hypervisors in pursuit of short-term savings, I advocate for a more holistic approach. Let's delve into a refined approach that considers your unique needs and goals.

"The Cloud Management Platform (CMP) approach is a versatile solution."

It's crucial to collaborate with a reliable solutions provider who comprehends the complexities of the cloud terrain and can assist you in developing a customized cloud strategy tailored to your business goals. Whether you're contemplating a multi-cloud strategy, integrating cloud-native tools, investigating hybrid cloud management platforms, or utilizing CMPs, their team of professionals should adeptly lead you through the process.

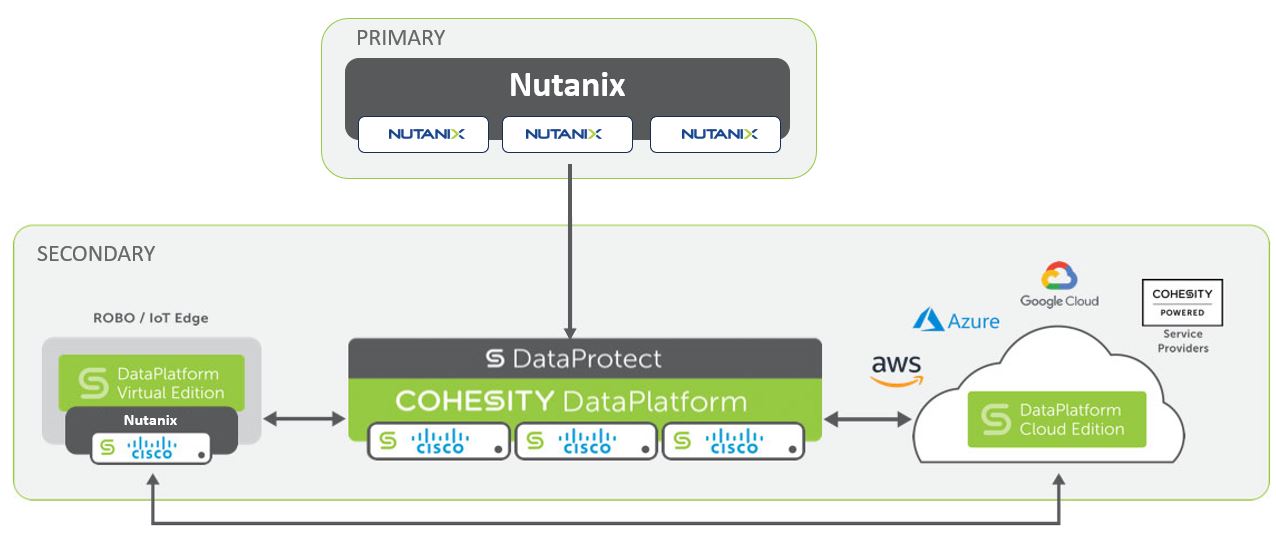

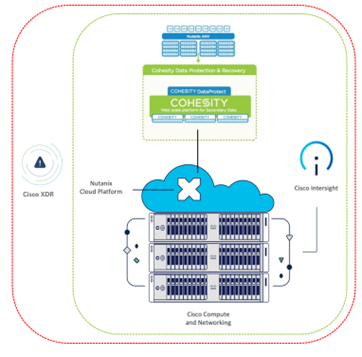

In this blog, I aim to delve into the significance of collaboration. Customers increasingly demand streamlined data center solutions to aid their modernization efforts, whether they are transitioning from the cloud, seeking alternatives to VMware, or incorporating a comprehensive range of technologies spanning network, compute, storage, backup, and security. The convergence of these technologies is redefining the terrain and gives businesses strong capabilities to navigate the intricacies of digital transformation.

Leading this shift is the collaborative alliance between Nutanix, Cisco, and Cohesity. Together they offer a comprehensive approach to data infrastructure that merges innovation with technical expertise. In 2023, Cisco revealed its global strategic alliance with Nutanix, fostering collaboration and a shared vision: to furnish businesses with agile and scalable solutions adept at addressing the swiftly evolving demands of the digital era. This development marked a significant industry transformation, including the discontinuation of HyperFlex in favor of Nutanix.

Central to this three-pronged solution is Nutanix's hyper-converged infrastructure (HCI), which serves as a strong foundation for a modern IT architecture. Nutanix's HCI platform unifies compute, storage, and networking into a single, software-defined solution, eliminating the need for traditional siloed infrastructure and simplifying management. By leveraging Nutanix's distributed architecture and built-in resiliency features, organizations can achieve scalability, agility, and performance across their entire infrastructure footprint, including public cloud.

The partnership extends to data protection and management through integration with Cohesity, a leading provider of modern data management solutions.
Cohesity's hyper-converged secondary storage platform consolidates data management functions such as backup, recovery, replication, and archival onto a single, web-scale platform, simplifying operations and reducing infrastructure sprawl. By seamlessly integrating with Nutanix's HCI and Cisco's networking infrastructure, Cohesity enables organizations to achieve near-instantaneous backup and recovery times, ensuring business continuity and data resiliency in the face of disruptions.

Complementing Nutanix's HCI prowess is Cisco's formidable networking and security expertise. Through seamless integration with Cisco's XDR (Extended Detection and Response) capabilities, organizations can fortify their defenses against a myriad of cyber threats, ranging from ransomware attacks to advanced persistent threats (APTs). Cisco's XDR platform leverages advanced analytics, machine learning, and automation to detect, investigate, and remediate security incidents in real time, ensuring comprehensive protection across the entire Nutanix environment.

The further integration between Cisco XDR and Cohesity brings together the strengths of both platforms, creating a unified approach to cybersecurity and data management. Here's how this integration benefits organizations:

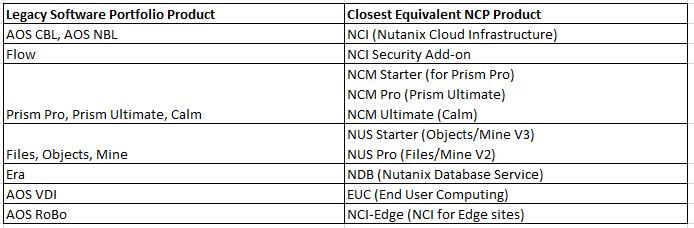

The integration between Cisco XDR and Cohesity offers organizations a powerful solution for cybersecurity and data management. By combining threat detection, incident response, and data protection capabilities, this integration helps organizations better defend against cyber threats and safeguard their critical data assets.

From a technical standpoint, the integration between Nutanix, Cisco, and Cohesity is underpinned by a shared commitment to interoperability, performance optimization, and ease of deployment. Leveraging open APIs and standardized protocols, the three partners have collaborated extensively to ensure seamless integration and compatibility across their respective platforms. This collaborative approach extends to joint engineering efforts, with dedicated teams working to optimize performance, scalability, and reliability across the entire solution stack.

Moreover, the partnership offers a unified management experience through Nutanix Prism, providing administrators with a single-pane-of-glass interface to monitor, manage, and orchestrate their entire infrastructure environment. Nutanix Prism seamlessly integrates with Cisco Intersight, enabling organizations to leverage Cisco's cloud-based management platform for streamlined operations and enhanced visibility across distributed environments.

The convergence of Nutanix, Cisco, and Cohesity represents a paradigm shift in the realm of data management, offering organizations a comprehensive, future-proof solution to navigate the complexities of modern IT infrastructure. By combining Nutanix's HCI innovation with Cisco's networking and security prowess, and Cohesity's data protection expertise, businesses can unlock new levels of agility, efficiency, and resilience in their journey towards digital transformation.
As organizations continue to embrace the challenges and opportunities of the digital age, the Nutanix-Cisco-Cohesity partnership stands ready to empower them with the tools and technologies they need to thrive in an increasingly interconnected world.

In the dynamic realm of technology, software products continuously undergo upgrades, transitions, and eventual retirements. This pattern is evident with Nutanix, a prominent provider of cloud software and hyper-converged infrastructure solutions. Their recent announcement concerning the end-of-sale for legacy software products signals a significant transition for Nutanix users, emphasizing the importance of understanding the implications and planning for the shift to the new Nutanix Cloud Platform. Let's delve into the details of this announcement.

Understanding the Announcement:

As of February 1st, 2024, Nutanix has officially announced the end-of-sale for its legacy software products, including AOS, Flow, Prism, Calm, Files, Objects, Mine, and Era. This announcement marks the beginning of a transition period for Nutanix customers. The end-of-sale date is set for July 31st, 2024, which means that after this date, these legacy products will no longer be available for purchase.

Impact on Customers:

For existing Nutanix customers with active software subscriptions, there's a grace period until January 31st, 2025, to renew their subscriptions for the legacy products. However, after this date, renewals will only be possible with equivalent products from the new Nutanix Cloud Platform portfolio. This transition offers an opportunity for users to explore the enhanced features and benefits of the new platform while ensuring uninterrupted service for their current workloads.

Options for Customers:

Nutanix is providing customers with flexibility during this transition period.
Customers can choose to renew their legacy software subscriptions before the end-of-renewal date or opt for equivalent products from the new portfolio. Additionally, Nutanix offers the option to convert legacy software subscriptions to new portfolio subscriptions before the expiration of the legacy subscription term. This allows users to seamlessly transition to the new platform without disruption.

Renewals and Conversions:

What are the options for renewing a legacy software portfolio subscription?

Equivalent Products

The table below lists the closest equivalent products. Make sure to take the training on NCP if you have more questions.

Planning for the Future:

For Nutanix customers, planning for the future involves understanding the implications of the end-of-sale announcement and taking appropriate action. It's essential to assess current software needs, evaluate the features and benefits of the new Nutanix Cloud Platform, and engage with the Nutanix account team or a qualified partner for further guidance and support. By proactively planning for the transition, customers can ensure continuity of operations and leverage the latest innovations offered by Nutanix.

The end-of-sale announcement for Nutanix legacy software products signifies a strategic shift towards the new Nutanix Cloud Platform. While this transition may present challenges, it also offers opportunities for users to modernize their infrastructure and optimize their cloud operations. By understanding the milestones, exploring available options, and engaging with Nutanix resources and strong partners, customers can navigate this transition effectively and unlock the full potential of the Nutanix ecosystem.
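To make the milestones above easier to reason about, here is a minimal Python sketch that encodes the two dates from the announcement and reports which options are open on a given day. The function name, the dictionary keys, and the choice to treat both dates as inclusive are my own assumptions for illustration; always confirm the exact terms with your Nutanix account team.

```python
from datetime import date

# Dates taken from the announcement as described in this post.
END_OF_SALE = date(2024, 7, 31)      # last day legacy products can be purchased
END_OF_RENEWAL = date(2025, 1, 31)   # last day legacy subscriptions can be renewed


def legacy_options(on: date) -> dict[str, bool]:
    """Return which options are open on a given day.

    Assumption: both milestone dates are inclusive. The key names are
    hypothetical and exist only for this illustration.
    """
    return {
        "purchase_legacy": on <= END_OF_SALE,
        "renew_legacy": on <= END_OF_RENEWAL,
        # Equivalent Nutanix Cloud Platform products remain an option throughout.
        "renew_with_ncp_equivalent": True,
    }


if __name__ == "__main__":
    for day in (date(2024, 6, 1), date(2024, 10, 1), date(2025, 3, 1)):
        print(day.isoformat(), legacy_options(day))
```

A check like this can sit in a renewal-tracking script so that subscriptions approaching either milestone get flagged early.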

Unlocking the Power of Nutanix Cloud Clusters (NC2): Sizing and Deployment on Azure and AWS

10/18/2023

Navigating the dynamic landscape of cloud computing requires solutions that offer flexibility, simplicity, and cost-efficiency. Nutanix Cloud Clusters (NC2) stands out in the hybrid multi-cloud realm, providing a comprehensive platform that caters to diverse cloud deployment needs. This guide explores NC2 in detail, highlighting its advantages and its deployment on Azure and AWS. Whether you are planning a migration to the cloud or optimizing your current cloud infrastructure, NC2 is a force to be reckoned with.

Demystifying Nutanix NC2

Nutanix Cloud Clusters (NC2) brings Nutanix's hyper-converged infrastructure (HCI) stack (Nutanix AOS, AHV, and Prism) into the public cloud. It does this by running on bare metal instances, facilitating application migration from on-premises environments to public cloud providers like Azure and AWS.

The Multifaceted Benefits of NC2

Exploring NC2's Use Cases

The versatility of NC2 makes it an invaluable asset for organizations. Here are some practical use cases:

Comparative Analysis: NC2 vs. VMware Offerings

While Nutanix Cloud Clusters (NC2), VMware Cloud on AWS, and the Azure VMware Solution all offer hybrid cloud solutions for running virtualized workloads, each presents distinct characteristics:

Nutanix Cloud Clusters (NC2):

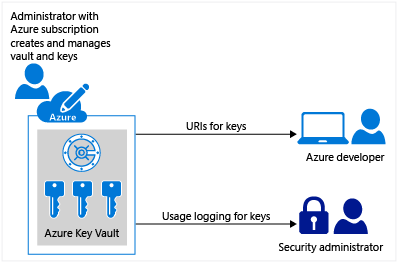

My customers are progressively embracing hybrid and multi-cloud environments to harness the advantages of a range of cloud platforms, including Microsoft Azure, AWS, and Google Cloud. While these configurations deliver great flexibility and scalability, they also bring intricate cybersecurity hurdles. Within this blog, I will delve into the most effective strategies for fortifying hybrid and multi-cloud setups, with a particular emphasis on Microsoft Azure, to protect sensitive data and uphold a resilient cybersecurity stance.

Best Practices for Securing Hybrid/Multi-Cloud Environments with Microsoft Azure:

1. Implement Strong Identity and Access Management (IAM): To ensure authorized access and prevent data breaches, utilize Azure Active Directory (Azure AD) for centralized identity management. Enforce multi-factor authentication (MFA) and role-based access control (RBAC) to maintain fine-grained permissions. Regularly review and update user access privileges to mitigate the risk of unauthorized access.

2. Encrypt Data in Transit and at Rest: Protecting data is paramount. Utilize SSL/TLS for data transmission between services and implement Azure Key Vault for secure key management. Employ Azure Disk Encryption to safeguard data at rest on virtual machine disks, and rely on Azure Storage encryption for data held in Azure Storage, providing an additional layer of protection.

Azure Key Vault

Azure Key Vault is a cloud service that provides a secure store for secrets. You can securely store keys, passwords, certificates, and other secrets. Azure key vaults may be created and managed through the Azure portal. Azure Key Vault offers the following features:

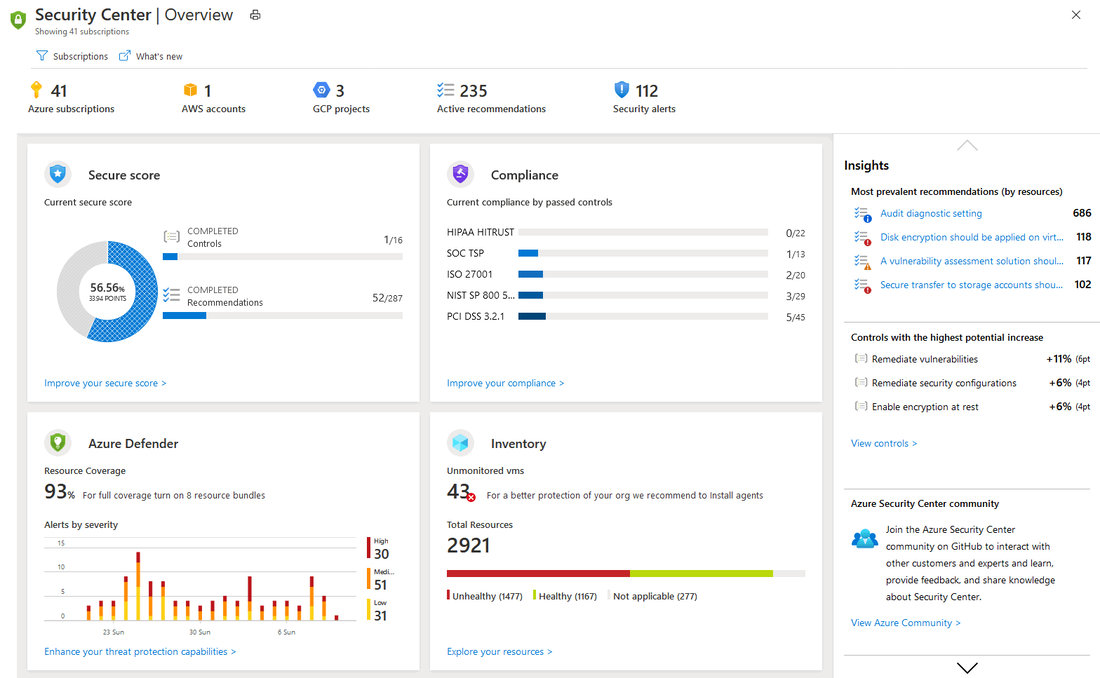
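For the data-in-transit half of practice 2 above, here is a minimal client-side sketch in Python that refuses anything older than TLS 1.2 and keeps certificate and hostname verification on. It uses only the standard library; the function names are my own, and TLS 1.2 as the floor is an assumption you should align with your organization's policy.

```python
import ssl
import urllib.request


def strict_tls_context() -> ssl.SSLContext:
    """Build a client TLS context with verification on and a TLS 1.2 floor."""
    # create_default_context() enables certificate and hostname verification.
    ctx = ssl.create_default_context()
    # Assumption: TLS 1.2 is the minimum acceptable protocol version.
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    return ctx


def open_https(url: str):
    """Open an HTTPS URL using the strict context (hypothetical helper)."""
    return urllib.request.urlopen(url, context=strict_tls_context(), timeout=10)
```

Any service-to-service call made through a context like this fails fast if the remote endpoint only offers a weaker protocol or presents an untrusted certificate.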

3. Network Security and Segmentation: Establish a secure network environment using Network Security Groups (NSGs) and Azure Firewall to control traffic flow between virtual networks and subnets. Leverage Virtual Private Networks (VPNs) or ExpressRoute for encrypted and private connections between on-premises and Azure resources, mitigating the risk of data interception.

4. Continuous Monitoring and Threat Detection: Stay vigilant by enabling Azure Security Center to monitor and detect threats across your Azure resources. Leverage Azure Monitor, Azure Log Analytics, and Azure Network Watcher for real-time monitoring and rapid incident response, ensuring timely action against potential security breaches.

Azure Security Center

As the cloud computing landscape persistently advances, businesses are required to maintain flexibility to keep pace. A growing number of my clients are finding it necessary to administer and operate within multi-cloud environments. Within this context, Nutanix Cloud Clusters (NC2) on Microsoft Azure present an effective solution for hybrid cloud infrastructures, helping businesses streamline operations and maximize resources. In this blog, I will delve into the fundamental features and advantages of Nutanix Cloud Clusters on Microsoft Azure and examine how it can transform your corporate IT strategy. Furthermore, I will compare this with the Azure VMware Solution (AVS) and discuss how to get started on your journey with Nutanix Cloud Clusters.

What is Nutanix Cloud Clusters (NC2) on Microsoft Azure?

Nutanix Cloud Clusters on Microsoft Azure, or NC2, is a jointly developed solution that facilitates seamless integration and management of private, public, and distributed cloud environments. It offers a unified infrastructure that allows businesses to move workloads between on-premises Nutanix clusters and Azure as their requirements dictate, providing true hybridity and mobility.
Key Features of Nutanix Cloud Clusters on Microsoft Azure

As most of you know, www.vmwarevelocity.com has been my blog on the internet for many years now, a place where we've gathered to discuss, learn, and explore everything related to VMware virtualization. I am deeply grateful for your engagement and feedback, which have spurred us all toward greater understanding.

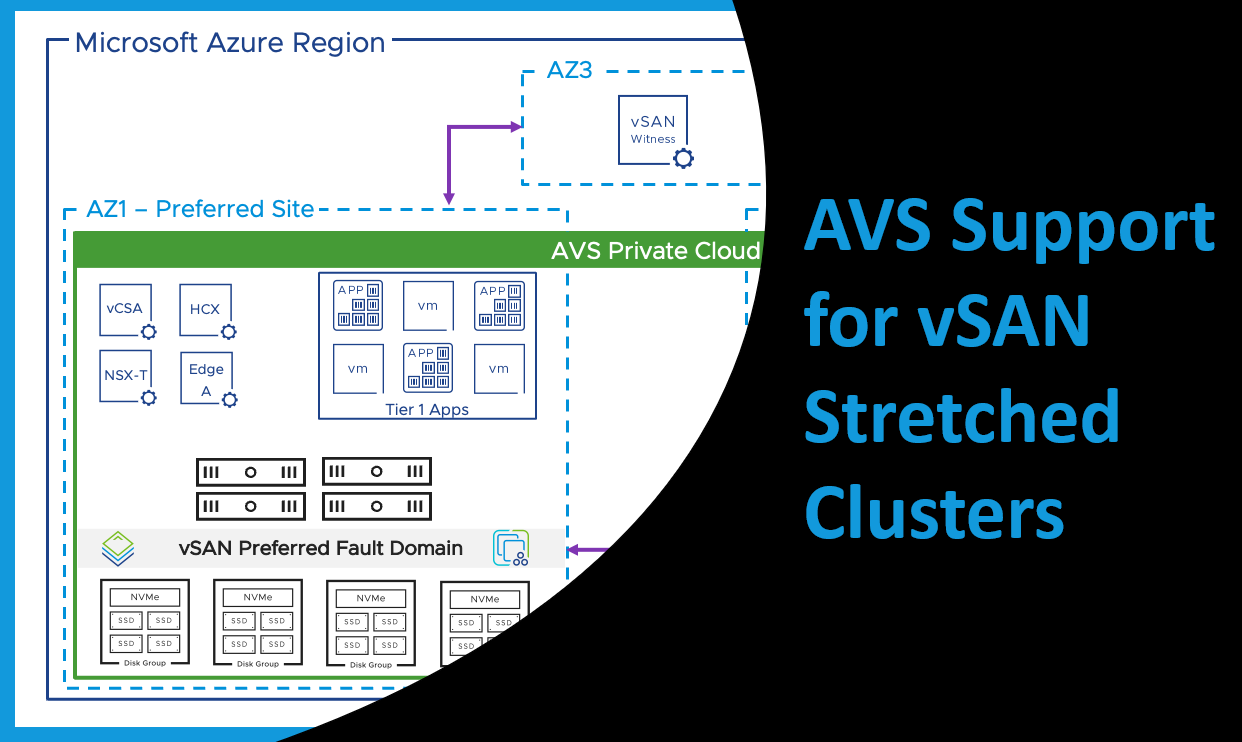

Today, I'm thrilled to announce a major evolution in my journey. I am transitioning from www.vmwarevelocity.com to a new and more comprehensive blog: www.virtualizationvelocity.com.

Why the move, you may ask? While VMware has been, and will continue to be, an integral part of the conversation, I've come to a point in my virtualization adventure where limiting myself to one technology doesn't quite capture the entire picture. The world of virtualization is vast and continually growing. More players have entered the scene, bringing innovative solutions and technologies that are shaping the future of virtualization.

That's where www.virtualizationvelocity.com comes in. The new blog is designed to embrace this broader perspective of virtualization. Alongside VMware, I will be diving into a multitude of other cutting-edge virtualization technologies, including Nutanix, Azure, Google Cloud, and Amazon Web Services (AWS). This expansion allows me to keep pace with the rapid advancements in the field and provides you with a more comprehensive resource for all things virtualization.

Does this mean I am leaving VMware behind? Absolutely not. I'll continue delving into VMware topics, updating you on the latest advancements, how-tos, and best practices. However, with the transition to www.virtualizationvelocity.com, I'll also be taking a deeper look into other platforms and exploring how they compare and contrast with VMware. I aim to provide a more rounded view of the virtualization landscape.

I'm incredibly excited about this new chapter in our shared journey. This transition is about growing together, expanding our knowledge base, and becoming more versatile in understanding virtualization technologies. Thank you for your continued support and engagement. I invite you to join me at www.virtualizationvelocity.com to kick off this exciting new phase of our virtualization exploration. Here's to a wider perspective, fresh insights, and new learning opportunities!
Stay tuned, and keep up with the velocity!

As businesses increasingly lean on technology, the need for continuous availability of critical applications and data has become vital. The interruption of IT operations can cause substantial financial and reputational losses to an organization. Therefore, implementing a robust business continuity plan that ensures uninterrupted operation of critical applications and data, even during a disaster, is imperative. One of the key technologies that support this requirement is VMware vSAN Stretched Clusters, which provide high availability and protection for mission-critical applications and data. Recently, AVS (Azure VMware Solution) support for vSAN stretched clusters has been made generally available in several Azure regions, including West Europe, UK South, Germany West Central, and Australia East.

The Power of Stretched Clusters

A stretched cluster is configured by deploying an AVS private cloud across two availability zones (AZs) within the same region, with a vSAN witness placed in a third AZ. This witness constantly monitors all hosts within the cluster, serving as a quorum in the event of a split-brain scenario. With hosts deployed evenly across the two AZs, the private cloud operates as a single entity. Leveraging storage policy-based synchronous replication, data is replicated across AZs, delivering a Recovery Point Objective (RPO) of zero. Thus, even if one AZ faces disruption due to an unforeseen event, the other AZ can continue operation, ensuring uninterrupted access to critical workloads.

Availability and Protection with vSAN

Each AZ is divided into a preferred and secondary vSAN fault domain. Under normal conditions, Virtual Machines (VMs) will use storage policies configured for dual site mirroring as well as local failures, residing in the preferred fault domain.

In case of a domain failure, vSAN powers off these VMs, and vSphere HA then powers on these VMs in the secondary fault domain. This flexibility allows administrators to apply a variety of different storage policies based on their specific requirements.
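The witness and quorum behavior described above can be sketched as a small decision function. This is a deliberately simplified model in Python, not how vSAN is implemented: it treats the preferred site, the secondary site, and the witness as three votes, assumes a healthy witness is reachable from every surviving site, and assumes the witness sides with the preferred site during a split-brain. All names are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class ClusterState:
    """Health of the three fault domains plus the link between the two sites."""
    preferred_up: bool
    secondary_up: bool
    witness_up: bool
    inter_site_link_up: bool = True


def surviving_site(state: ClusterState) -> Optional[str]:
    """Return 'both', 'preferred', 'secondary', or None if quorum is lost.

    Simplified model: a partition needs 2 of the 3 votes to stay active.
    """
    sites_up = [
        name
        for name, up in (("preferred", state.preferred_up),
                         ("secondary", state.secondary_up))
        if up
    ]
    if len(sites_up) == 2:
        if state.inter_site_link_up:
            return "both"  # the two sites alone already hold a majority
        # Split-brain: the witness breaks the tie in favor of the preferred site.
        return "preferred" if state.witness_up else None
    if len(sites_up) == 1:
        # A single site needs the witness to reach 2 of 3 votes.
        return sites_up[0] if state.witness_up else None
    return None


if __name__ == "__main__":
    print(surviving_site(ClusterState(True, False, True)))
    print(surviving_site(ClusterState(True, True, True, inter_site_link_up=False)))
```

Walking through cases like a lost AZ or a severed inter-site link with a model like this is a handy way to sanity-check a business continuity plan before testing failover for real.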
