Disruptive innovation is a term coined by Clayton Christensen. It describes a process by which a product or service takes root initially in simple applications at the bottom of a market and then relentlessly moves up market, eventually displacing established competitors. For example, look at what a company like Uber has done to the taxi business in San Francisco. Uber doesn't hire drivers like Yellow Cab does. It doesn't own a fleet of cars. It built an application, one that has been very disruptive to the taxi industry and is changing the landscape of ride-hailing services. Thanks to Uber, San Francisco's largest yellow cab company is filing for bankruptcy. Yellow Cab Co-op President Pamela Martinez was quoted as saying that some of the financial setbacks "are due to business challenges beyond our control and others are of our own making." Yellow Cab's drivers are flocking to Uber, an app-based enterprise, lured by the promise of more riders and better schedules. Yellow Cab has been turned on its head by a disruptive innovation, and Uber's lasting impact on the ride-hailing industry is now moving across the country.

Why do I point this out? Because you are either being disrupted or you are the disrupter. Think about that for a second. Ask Yellow Cab how it feels to be disrupted in an industry it felt very secure in before an application took over.

Look at companies like Blockbuster. I bet you can tell me who disrupted them. Got it in your mind? At its peak in 2004, Blockbuster had nearly 60,000 employees and over 9,000 store locations. In 2000, a fledgling company came on the scene, slowly changing the landscape of the movie rental industry and eventually bankrupting Blockbuster in 2010. If you were thinking of Netflix, you are correct. Netflix is now a $28 billion company, about ten times what Blockbuster was worth. Blockbuster has been greatly disrupted and is reinventing itself.
You can either be disrupted or be the disrupter, as VMware has been. VMware has been a disruptive force in the technology industry from its entry with vSphere to its latest creations like SDDC, vSAN and NSX. vSphere changed the landscape of compute forever: it moved CPU, memory and other resources into software, removed the dependency on hardware, and has become the most popular infrastructure management API in use today. Disruption doesn't happen overnight; it happens gradually. Remember, disruptive innovation means taking root and relentlessly moving up market. Uber didn't overtake Yellow Cab overnight, and Netflix didn't overtake Blockbuster overnight either. A disruptor was introduced and slowly moved to overtake the industry. The same is true for vSphere. Industry leaders were hesitant to adopt such a drastically different technology, but now this tried, tested and proven technology is the leader in x86 server virtualization infrastructure. VMware continues to be a disruptive force in the technology industry. Look at the movement to hyper-converged infrastructure. Hyper-converged is about software, not hardware: it derives from being able to support all infrastructure in software, without the need for separate dedicated hardware such as a storage array or Fibre Channel switch. And what is the core software technology in just about every hyper-converged product available today? VMware vSphere and the Software Defined Data Center.

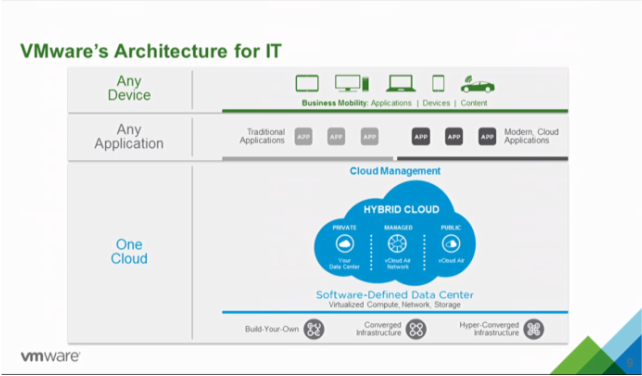

VMware is disrupting the way we have traditionally approached the data center: fully virtualized infrastructure, delivered on a flexible mix of private and hybrid clouds. I'm sure you have all heard the mantra, "One Cloud, Any Application, Any Device." This is the next evolution in data center technology, and VMware continues to lead disruptive change with products like NSX for Software Defined Networking (SDN). NSX, like vSphere, has seen slow adoption. I find myself having the same conversations with customers that I had when vSphere was introduced, and today you no longer have to convince customers of the value of vSphere. The speed of adoption is picking up: VMware saw a threefold increase in the number of paying customers for its NSX network virtualization product, and in Q4 2015, 9 out of 10 VMware deals included NSX. NSX is an innovative approach to solving long-standing network provisioning bottlenecks within the data center, and it integrates switching, routing and upper-layer services into an application and network orchestration platform. With an overlay solution that may not require hardware upgrades, NSX offers customers a potentially quicker way to take advantage of SDN capabilities. NSX is that disruptor in the networking industry, bringing agility to existing network deployments with limited impact on existing network hardware, and offering all of this without vendor lock-in. VMware NSX works across many IP-based network installations and in virtual environments running mainstream hypervisors, and VMware has established relationships with a broad set of IT vendor partners to provide integration of security and optimization solutions, as well as with key network hardware players such as Palo Alto, Arista Networks, Brocade, Dell, HP and Juniper Networks.
Remember how, at the beginning of this blog, I quoted President Pamela Martinez as saying that some of the financial setbacks "are due to business challenges beyond our control and others are of our own making"? Some challenges were of their own making. Remember too that disruptive innovation happens over a period of time. It took 10 years for Netflix to overtake Blockbuster. Could Blockbuster have moved more quickly to secure its spot as the leader in online movie rental? The same is true of VMware and vSphere. This disruptive innovation took time to take hold, and it is still a driving force for change in the industry with SDDC. VMware NSX is picking up steam and is at the heart of every solution companies are moving toward, from hyper-converged to hybrid cloud. The question is: will you be disrupted or be part of the disruption? I want to be part of the disruption and drive change in an exciting time to be part of this industry. Will you be disrupted, or will you help disrupt? It's a call to action: be the disruptive force your company doesn't even know it needs, because NSX will do for networking what vSphere did for compute. Disrupt or be disrupted.

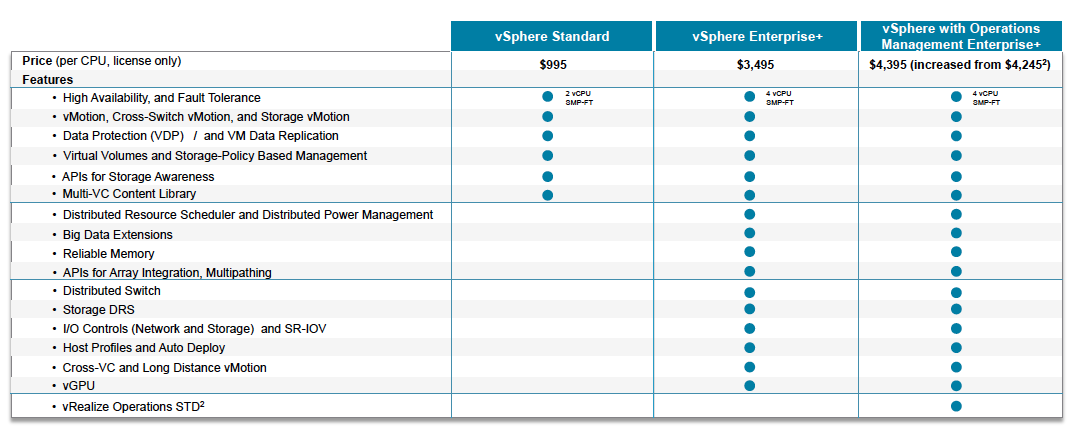

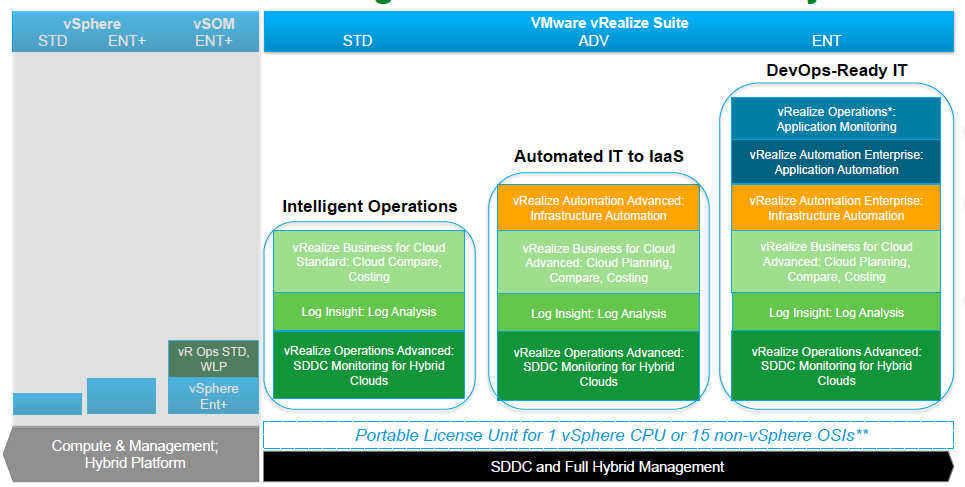

This year VMware has made changes to its licensing to better address the needs of its customers. The new packages are tailored to the top use cases of VMware customers in both SDDC and Hybrid Cloud. You should see increased value, with more features in the product editions (for example, Log Insight is now included with vSphere) and added portability of your licenses. New product lineup for VMware vSphere and vSphere with Operations Management (vSOM) editions:

vCloud Suite (vCS) = vRealize Suite (vRS) + vSphere Enterprise Plus for vCloud Suite:

New vSphere and vSphere with Operations Management: see the VMware pricing page for further information.

vRealize Suite (vRS) and vCloud Suite (vCS) Update for Changes in 2016:

VMware has vastly simplified the packaging of its cloud management and compute virtualization product portfolio. See the VMware Blog for in-depth information.

It's that time again, and I highly suggest joining in. Not only will you be part of a great community learning new products, but you'll also get the chance to offer input on the product's direction.

The target audience is customers who have deployed vSphere 5.5 or 6.0 in a portion of their environment. Participants are expected to:

vSphere Beta Program Overview

We are excited to announce the upcoming VMware vSphere Beta Program. This program enables participants to help define the direction of the most widely adopted industry-leading virtualization platform. Folks who want to participate in the program can now indicate their interest by filling out this simple form. The vSphere team will grant access to the program to selected candidates in stages. This vSphere Beta Program leverages a private Beta community to download software and share information. We will provide discussion forums, webinars, and service requests to enable you to share your feedback with us. You can expect to download, install, and test vSphere Beta software in your environment or get invited to try new features in a VMware hosted environment. All testing is free-form and we encourage you to use our software in ways that interest you. This will provide us with valuable insight into how you use vSphere in real-world conditions and with real-world test cases, enabling us to better align our product with your business needs.

Some of the many reasons to participate in this beta opportunity:

With the release of vSphere 6.0 Update 1, I knew it would only be a matter of time until I was engaged to upgrade an environment. My customer had a small vSphere 5.0 production environment that they wanted upgraded to 6.0. I met with them, helped educate them on the new features of 6.0 and designed an upgrade plan. Because of the new vCenter features, the customer wanted to migrate to the vCenter Appliance; if you are not aware, the vCenter Appliance now supports the same infrastructure as the Windows-based vCenter.

Some initial challenges with migrating their infrastructure came from design decisions made before the migration. The vCenter database was installed on the Windows-based vCenter server itself, which meant that I could not migrate using the VCS to VCVA Converter. If you are not familiar with the product, take a look at it here. The product makes migrating a Windows-based vCenter with an external database very simple. You deploy a new vCenter Appliance with the same name and IP as the current vCenter. Then you deploy the Migration Appliance, which gathers settings from the Windows vCenter. The Windows vCenter is then shut down and you point the appliance to the external vCenter database. Security profiles and other settings are migrated into the new vCenter Appliance.

What I Learned

This was not my first upgrade, and since the customer wanted to migrate to the vCenter Appliance, I needed to make sure that all settings were brought over correctly.

Lesson 1 - Interoperability & Planning

One of the main areas I have seen missed during the planning stage is checking the interoperability matrices from vendors and VMware to make sure that the hardware you are upgrading is supported. Vendors have created some great online tools for this task; although it can be time-consuming, it will save you from troubleshooting the environment after the upgrade.
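Lesson 1 boils down to comparing an inventory against compatibility data before committing to the upgrade. The sketch below is a minimal illustration of that comparison, assuming a hypothetical, hand-maintained matrix; the component names, versions and the `unsupported` helper are placeholders, not data from the VMware Compatibility Guide or any vendor tool.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Component:
    kind: str      # e.g. "server", "nic", "hba"
    model: str
    firmware: str  # reported only; this sketch does not match on firmware


# Hypothetical matrix: (kind, model) -> ESXi releases listed as supported.
# Every entry is a placeholder, not real compatibility data.
COMPAT_MATRIX: dict[tuple[str, str], set[str]] = {
    ("server", "ExampleServer G8"): {"5.0", "5.5", "6.0"},
    ("nic", "ExampleNIC 10GbE"): {"5.0", "5.5"},
}


def unsupported(components: list[Component], target: str) -> list[Component]:
    """Return components with no supported entry for the target ESXi release."""
    return [
        c for c in components
        if target not in COMPAT_MATRIX.get((c.kind, c.model), set())
    ]


inventory = [
    Component("server", "ExampleServer G8", "2.1"),
    Component("nic", "ExampleNIC 10GbE", "1.4"),
    Component("hba", "ExampleHBA 8Gb", "3.0"),  # not listed in the matrix at all
]

for c in unsupported(inventory, "6.0"):
    print(f"Verify before upgrading: {c.kind} {c.model} (firmware {c.firmware})")
```

Here the NIC and the HBA would be flagged for 6.0. A real check would typically also compare firmware and driver versions, which is exactly the detail the vendor tools help with.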
Lesson 2 - VMware Supported Sign Off

Once you have your plan in place, open a case with VMware to review the steps with you. They will take the time to review the environment and your upgrade plan to ensure that you did not miss any steps.

Lesson 3 - Virtual Distributed Switches

Migrating a vDS from 5.0 to 6.0 presents challenges when moving from a Windows-based vCenter to an appliance. This can be accomplished in stages: first upgrade to 5.5, then export the vDS configuration and import it into the new appliance (see KB2034602). This process obviously adds to the complexity of an upgrade of this type, but depending on the size of the environment it may be the way to go if you really need to migrate to the appliance. With this particular environment being so small, and with no vDS in use due to license limitations, I luckily didn't have to deal with that scenario.

Lesson 4 - Easy Upgrade

Once the new appliance had been deployed, migrating the hosts and VMs was pretty straightforward: disconnect the hosts from the 5.0 Windows vCenter, add them to the newly created vCenter 6.0 Appliance and place them in the new cluster.
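The migration-path reasoning in this post (the converter needs an external database, and a 5.0 vDS has to be staged through 5.5 per Lesson 3) can be summarized as a small decision function. This is a hypothetical planning sketch, not a VMware tool: `SourceVCenter`, `plan_migration` and the step wording are illustrative, and the branches only cover the cases discussed here.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class SourceVCenter:
    version: str       # e.g. "5.0"
    db_is_local: bool  # True if the vCenter database runs on the vCenter server itself
    uses_vds: bool     # True if any vSphere Distributed Switch is in use


def plan_migration(src: SourceVCenter) -> list[str]:
    """Return high-level steps for moving to a vCenter 6.0 Appliance (illustrative only)."""
    steps: list[str] = []
    if src.uses_vds and src.version == "5.0":
        # Lesson 3: stage through 5.5, then export/import the vDS config (KB2034602)
        steps += [
            "Upgrade the Windows vCenter to 5.5",
            "Export the vDS configuration",
            "Deploy the vCenter 6.0 Appliance",
            "Import the vDS configuration into the appliance",
        ]
    elif not src.db_is_local:
        # The VCS to VCVA Converter expects an external vCenter database
        steps.append("Migrate with the VCS to VCVA Converter")
    else:
        # Local database and no vDS: the path taken in this post
        steps += [
            "Deploy a new vCenter 6.0 Appliance and create the cluster",
            "Disconnect the hosts from the Windows vCenter",
            "Add the hosts to the appliance and place them in the new cluster",
        ]
    steps.append("Upgrade hosts and VMs (e.g. with an Update Manager baseline)")
    return steps


# The customer in this post: vCenter 5.0, local database, no vDS
for step in plan_migration(SourceVCenter("5.0", db_is_local=True, uses_vds=False)):
    print(step)
```

Encoding the decision this way mainly helps when you are planning several environments and want the same questions asked every time; Lesson 2's VMware review still applies to whatever plan comes out.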

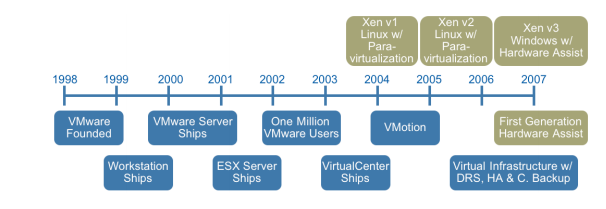

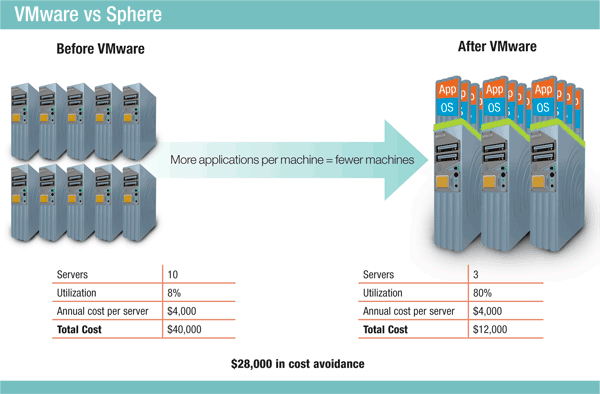

At this point it's just a matter of upgrading hosts and VMs. I created a new upgrade baseline using the ESXi 6.0 Update 1 ISO and attached it to the host that required the upgrade. This is only one of many upgrade paths to 6.0. Don't forget that you will need to have deployed Update Manager. It is not supported on the same VM as the appliance, so it will need to be installed on a separate VM. Note: the VCSA ISO does not include the Update Manager installer, so you will need to download the vCenter ISO alongside the VCSA ISO.

Here we are in the future that Back to the Future predicted, and I find myself contemplating what the past really looked like compared to now for IT. For those of you who live under a rock and have not seen the movies, I will give a brief summary of the second movie in the trilogy. In "Back to the Future Part II," Marty McFly travels to October 21, 2015, to save his children, who are yet to be born in "Back to the Future's" 1985. The plot gets tangled: by fixing one thing, McFly, Doc Brown and the villainous Biff Tannen create a number of new mishaps, but what remains is the film's vision of a year that was still more than a quarter-century away when the movie was shot and released in 1989. In the IT realm, I found myself reminiscing about what the data center looked like back in 1989, when the movie was released, not to mention 1985, when the movie itself takes place. So hold onto your hats ("Great Scott!!"): we are going back to the past to revisit the data center before VMware's inception in 1998 and the impact we see today. In order to bore my readers thoroughly I will give a brief history lesson on computing, but don't worry, I have added plenty of pictures to stimulate your brains. So, let's fire this blog up to 88 miles per hour and get to the past.
Arriving in the 1980s, we find that mainframe computers, whose components took up a whole room, had been joined by minicomputers, whose components had been developed to such an extent that everything could be housed in a single cabinet, even if these were still the size of commercial freezers. During the 1980s, computer components became smaller and more powerful until eventually the microcomputer, or desktop PC, was developed. The early ones were sold in kit form, mainly to home enthusiasts. Eventually, though, these became more reliable, and software was developed that meant they found their way into businesses. The ‘dumb’ terminals connected to a mainframe computer were replaced with microcomputers, each with its own processor and hard drive. However, since this segmented information, issues of data integrity and duplication soon led to the development of networks of server and client microcomputers. The servers often ended up housed in the computer rooms, either alongside or instead of the mainframes and minicomputers, often in 19” rack mounts that resemble rows of lockers. In 1985, IBM provided more than $30 million in products and support over the course of 5 years to a supercomputer facility established at Cornell University in Ithaca, New York. This is what the data center looked like in the 1980s, and in the immortal words of Doc Brown, "Great Scott!"

Jumping back into the DeLorean and taking a quick trip forward to the 1990s, we find the data center still evolving, and microcomputers are now called “servers”. With the availability of inexpensive networking equipment, companies started putting up server rooms inside their own walls. The biggest change in the nature of data centers comes as a result of the lifting of restrictions on the commercial use of the Internet.
Companies needed fast Internet connectivity and nonstop operation to deploy systems and establish a presence on the Internet, and many companies started building very large facilities to provide businesses with a range of solutions for systems deployment and operation. Enter the era of virtualization. In 1998 VMware comes onto the scene with a patent for its virtualization platform, and on February 8, 1999, VMware introduces the first x86 virtualization product, VMware Virtual Platform, based on earlier research by its founders at Stanford University. The impact of this is not fully realized at the time, but this event will change the future of data centers forever (see the timeline below). The solution combined binary translation with direct execution on the processor, allowing multiple guest OSes to run in full isolation on the same computer with affordable virtualization overhead.

Now let's hop back into that DeLorean and take one last trip to where we find ourselves today. VMware started this revolution nearly 17 years ago and continues to lead the industry in building out an operating-system-agnostic virtualization ecosystem to help companies transform their IT environments. Today there is no alternative that compares to VMware's performance, stability, ease of management, security, support, features and vast partner ecosystem. I guess the big question now is: what will the future hold for us in IT? The data center is now moving into the "cloud," with VMware again leading the charge with the idea of One Cloud, Any Application. We have seen the data center shrink its footprint by hosting multiple virtual servers on an x86 platform. We have seen the transformation of the business computer or workstation with VDI. We have seen the virtualization of storage with VSAN and VVOLs. We have seen the virtualization of the network with NSX, and we are seeing the transformation of companies into the cloud with vSphere Hybrid Cloud.
We have also seen the transformation of applications and application mobility with containerization and application virtualization.

What will the future hold for those of us lucky enough to bear witness to it? Only time will tell, and I for one am off on one last adventure in the DeLorean to visit the future, where Dell owns the world of computing. I'll let everyone know how the stock does when they go public. See you all in the future.

VMware CEO Pat Gelsinger announced a new hybrid cloud strategy today along with a series of product updates, including a new version of vSphere, VSAN, VVOLs, a distribution of OpenStack and integrations of NSX with vCloud Air. The new vision laid out by Gelsinger is one of a "Seamless and Complete Picture" of Any Device, Any Application and One Cloud. VMware spoke with its customers and found that they are looking for three key areas when it comes to IT:

With a foundation of vSphere 6.0 and new features including One Management (whether on-premises or off), NSX built into vCloud Air, VSAN and VVOLs, the architecture is designed to deliver a unified cloud. Gelsinger states that "customers increasingly need a software-defined infrastructure to enable the level of speed, agility and flexibility to respond to the challenges of IT."

VMware vSphere 6.0

VMware is raising the bar again with more than 650 new features in vSphere 6.0. Some of the newly announced features include:

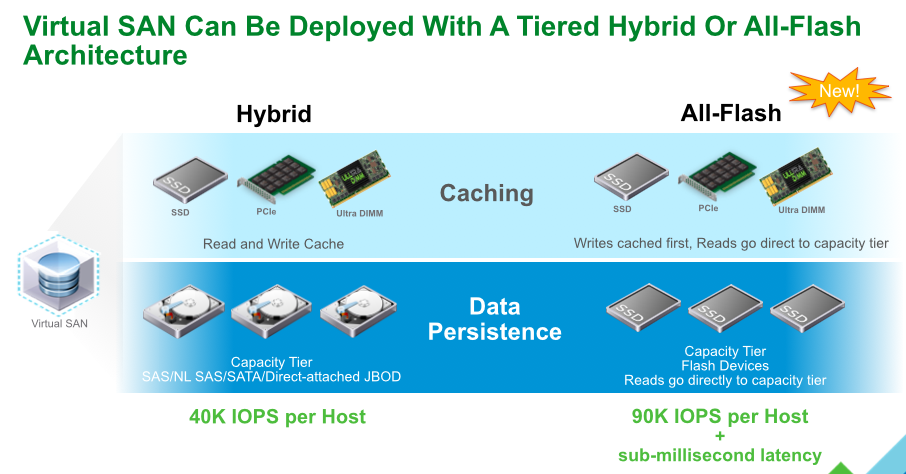

VMware VSAN

With significant improvements in scale and functionality, new features in VSAN include:

VVOLs

vSphere Virtual Volumes enable native virtual machine awareness on third-party storage systems, along with VMware's Instant Clone technology for cloning and provisioning thousands of virtual machines to make a new virtual infrastructure.

OpenStack

VMware's OpenStack distribution will enable smaller IT departments with "little or no OpenStack or Linux experience" to deploy an OpenStack cloud within minutes.

VMware NSX

NSX will enable customers to achieve unprecedented security and isolation in a cloud with new features and enhancements.

VMware's Any Device, Any Application and One Cloud approach lets customers utilize multiple clouds to securely accelerate IT while managing through a single environment.

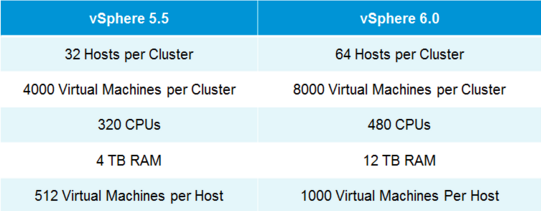

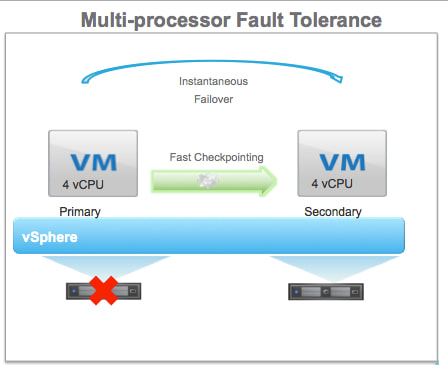

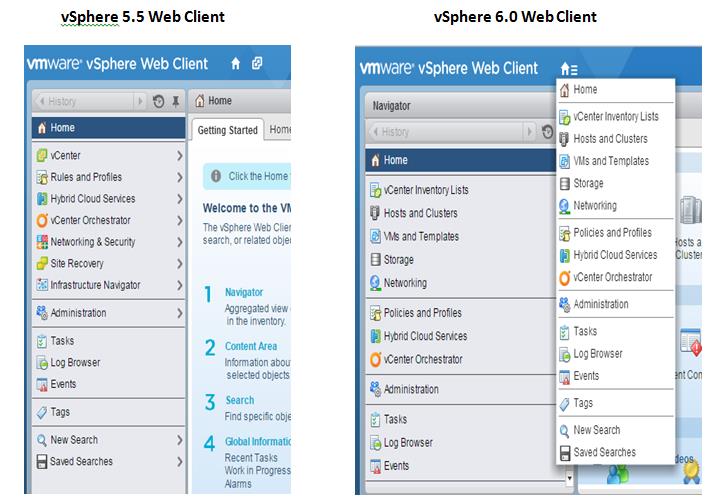

VMware vSphere 6.0 is the next major release after 5.5, and like any major release it is packed with new features and enhancements along with increased scalability. This version also brings some big improvements to VSAN, which I'll discuss below.

Host Improvements

In vSphere 5.5 the maximum supported host memory was 4TB; in 6.0 that jumps to 12TB. The maximum supported number of logical (physical) CPUs per host also increases from 320 in vSphere 5.5 to 480 in vSphere 6.0. The last host improvement is the maximum number of VMs per host, which increases from 512 in 5.5 to 1,000 in 6.0. Together, these increases give you room for some monster VMs.

Fault Tolerance Improvements

Fault Tolerance (FT) was introduced in vSphere 4. FT protects VMs from downtime in the event of a host failure. FT has never been widely used because its design prevented anyone who needed multiple vCPUs from using it. FT now supports more than one vCPU, moving from 1 vCPU to 4 vCPUs. The design of FT has also changed. In the past, you had 2 VMs on separate hosts, one primary and one secondary, and the VMs relied on shared storage. In vSphere 6.0 each VM can have its own virtual disks, which can be located on different storage. VMware also removed the prior snapshot limitation for FT; having separate disks helped with this issue. Now that there is built-in support for snapshotting an FT VM, you can back it up. Before this, admins were challenged with how to perform agentless backups while maintaining FT for the VMs that required it. To back up FT-protected VMs prior to 6.0, you had to install a backup agent and back up the VM in a more traditional manner.

vSphere Web Client Improvements

The web client has never been a favorite of admins, and with the release of vSphere 6.0 VMware has made some great performance and usability improvements.
Performance Improvements:

Usability Improvements:

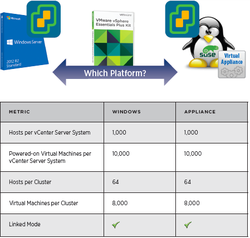

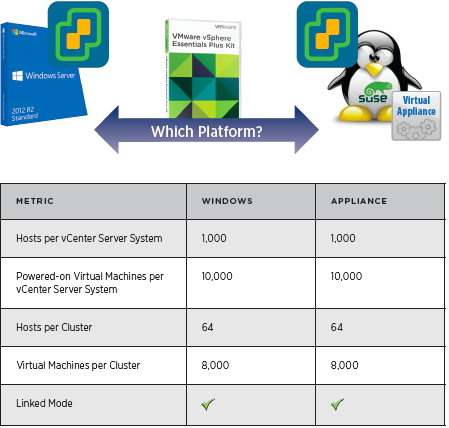

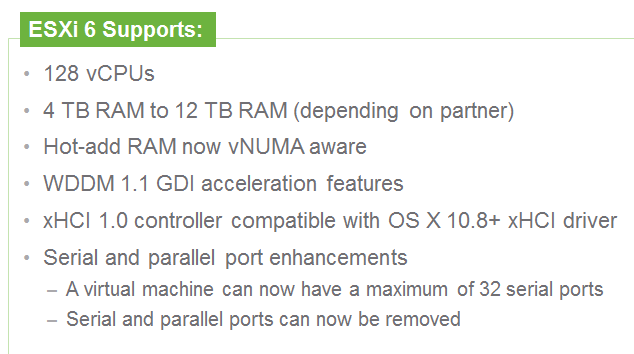

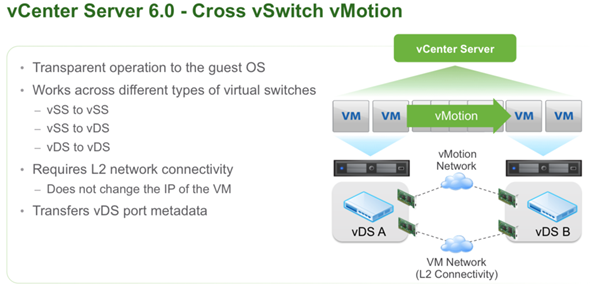

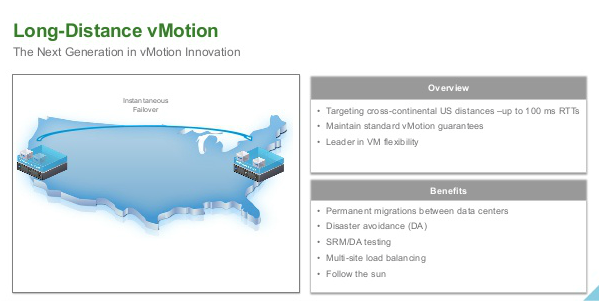

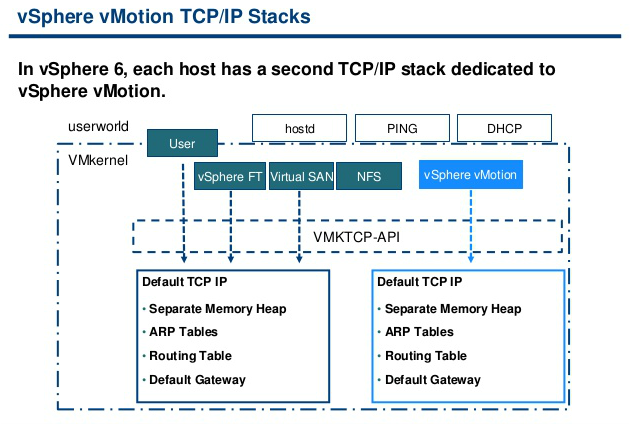

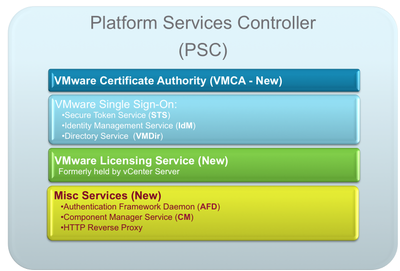

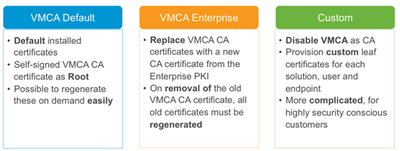

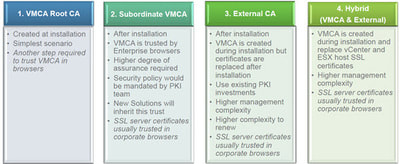

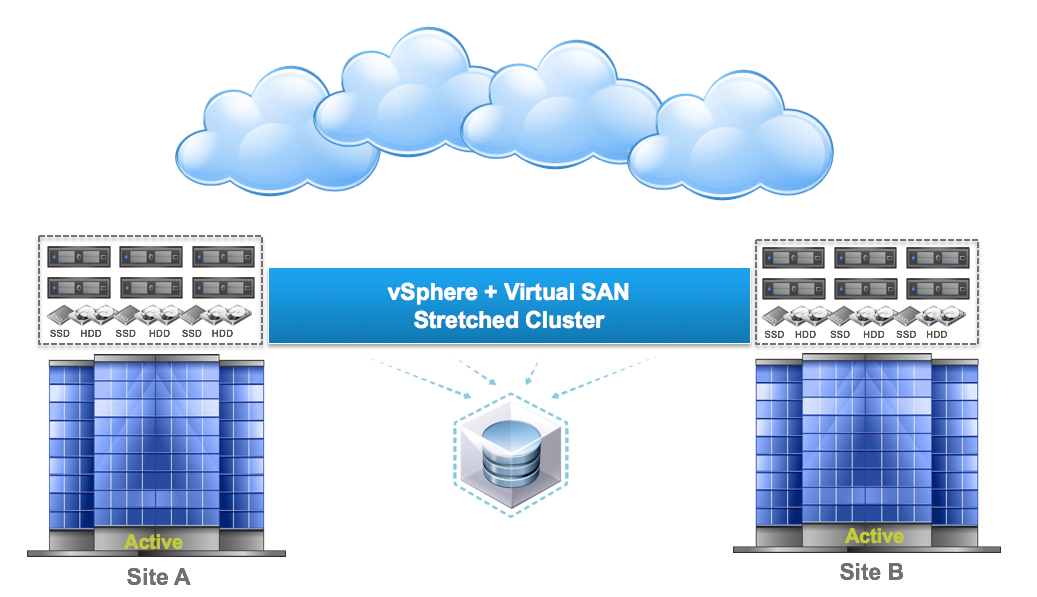

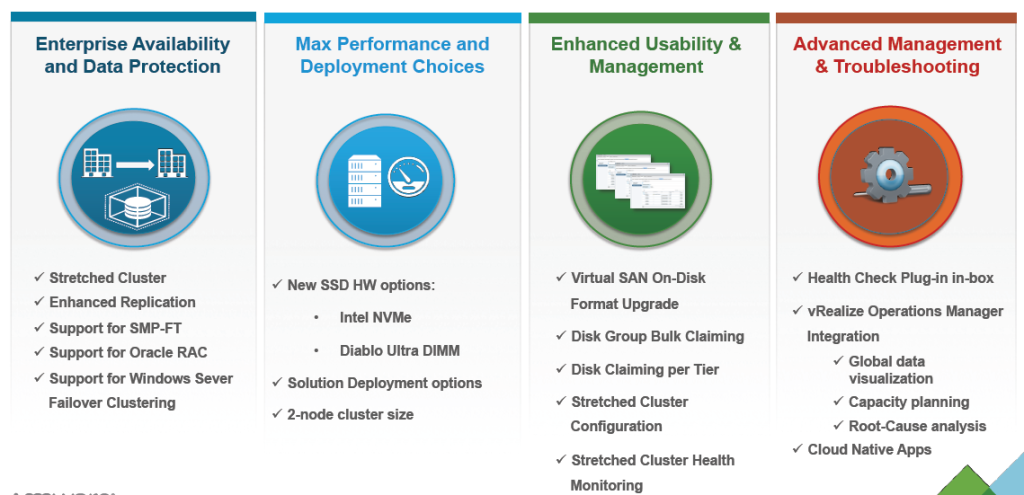
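The host and Fault Tolerance maximums quoted earlier in this post lend themselves to a quick design sanity check. The sketch below assumes only the 5.5 and 6.0 figures given in this post (confirm them against the official Configuration Maximums guide before relying on them); the `violations` helper and the sample design are hypothetical.

```python
from __future__ import annotations

# Limits as quoted in this post for vSphere 5.5 and 6.0.
MAXIMUMS: dict[str, dict[str, int]] = {
    "5.5": {"host_memory_tb": 4, "logical_cpus": 320, "vms_per_host": 512, "ft_vcpus": 1},
    "6.0": {"host_memory_tb": 12, "logical_cpus": 480, "vms_per_host": 1000, "ft_vcpus": 4},
}


def violations(design: dict[str, int], version: str) -> list[str]:
    """List every design value that exceeds the quoted maximum for a release."""
    limits = MAXIMUMS[version]
    return [
        f"{key}: {value} > {limits[key]}"
        for key, value in design.items()
        if value > limits[key]
    ]


# Hypothetical host design: 6 TB RAM, 400 logical CPUs, 600 VMs, 2-vCPU FT VMs
design = {"host_memory_tb": 6, "logical_cpus": 400, "vms_per_host": 600, "ft_vcpus": 2}
print(violations(design, "5.5"))  # all four values exceed the 5.5 limits
print(violations(design, "6.0"))  # []
```

The same design that breaks every 5.5 limit fits within 6.0, which is the practical upshot of the host and FT increases.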

vCenter Server Appliance Improvements

I have never been a fan of having to burn Microsoft licenses to deploy vCenter, or of the time it takes to spin up a Windows OS to support both the application and the SQL database. I loved it when VMware came out with the appliance for an easy OVA deployment, but its limitations in scalability and support have been the main reason admins have not deployed it in their environments. Now, in vSphere 6.0, the VCSA scales to the same limits as vCenter Server on Windows. This means the VCSA can support 1,000 hosts, 10,000 VMs and Linked Mode.

VM Improvements in 6.0

Back in vSphere 5.5, a VM could be configured with up to 64 vCPUs; in 6.0 that maximum has doubled to 128 vCPUs. That is a crazy amount of vCPU. Another increase for the VM is around serial ports. VMware saw a need for this and increased the maximum from 4 to 32 serial ports on a single VM. If you don't need them, you can remove the serial and parallel ports.

vMotion!!

There have been a lot of new enhancements to vMotion that increase the range and capabilities of vMotioning a VM. You can now vMotion a VM between different types of virtual switches: VMs can be moved from a Standard vSwitch to a Distributed vSwitch without changing their IP address and without network disruption. You can also now vMotion a VM from a host on one vCenter Server to a host on a different vCenter Server. There is no need for common shared storage between the hosts and vCenter servers. This eliminates the traditional distance boundaries of vMotion; you can now move VMs between data centers, across regions or even across continents. Another improvement to vMotion is around latency requirements. In vSphere 5.5 the maximum vMotion latency was 10ms. In vSphere 6.0 that has increased to 100ms.
This allows VMs to be vMotioned over longer distances. VMware vMotion also has its own TCP/IP stack, which can cross layer 3 networks.

Single Sign-On Improvements

In vSphere 6.0, VMware introduces a new Platform Services Controller. The controller groups together SSO, licensing and the certificate authority. You can deploy it embedded with vCenter Server, or externally so that it is completely independent of vCenter Server. Certificate management has always been a pain for administrators. With the introduction of the VMware Certificate Authority (VMCA), you can now manage the provisioning and deployment of certificates for your vCenter servers and ESXi hosts.

vSAN

Now in its third release as of August 2015, VSAN 6.1 has already seen adoption across different industries and company sizes. This release adds capabilities around availability, data protection and management. Virtual SAN Stretched Cluster allows admins to create a stretched cluster between two geographically separated data centers. Another feature of this release is Virtual SAN for Remote Office/Branch Office (ROBO), which provides the ability to deploy VSAN clusters at remote sites. You can now deploy large numbers of 2-node VSAN clusters that can be managed centrally through one vCenter Server in the main data center.

Some other new features include:

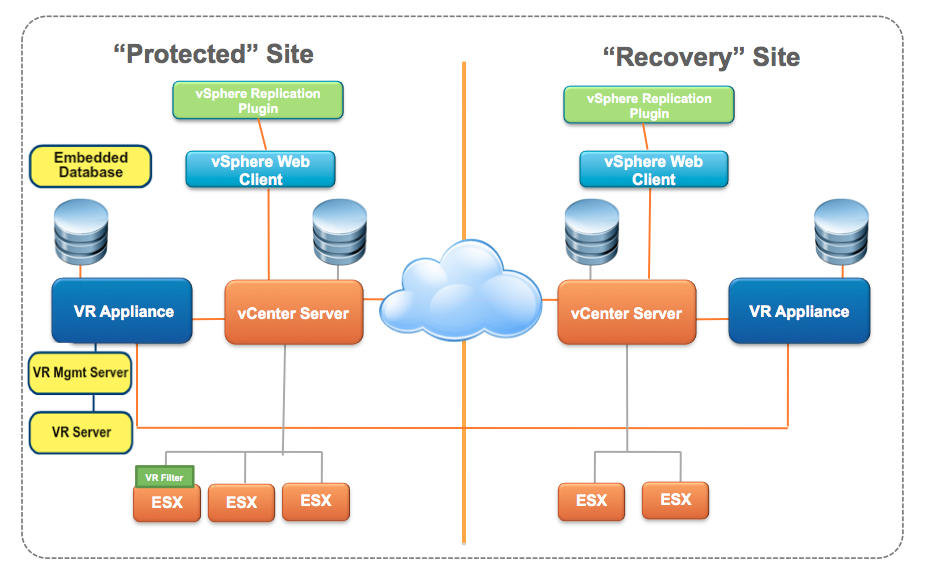

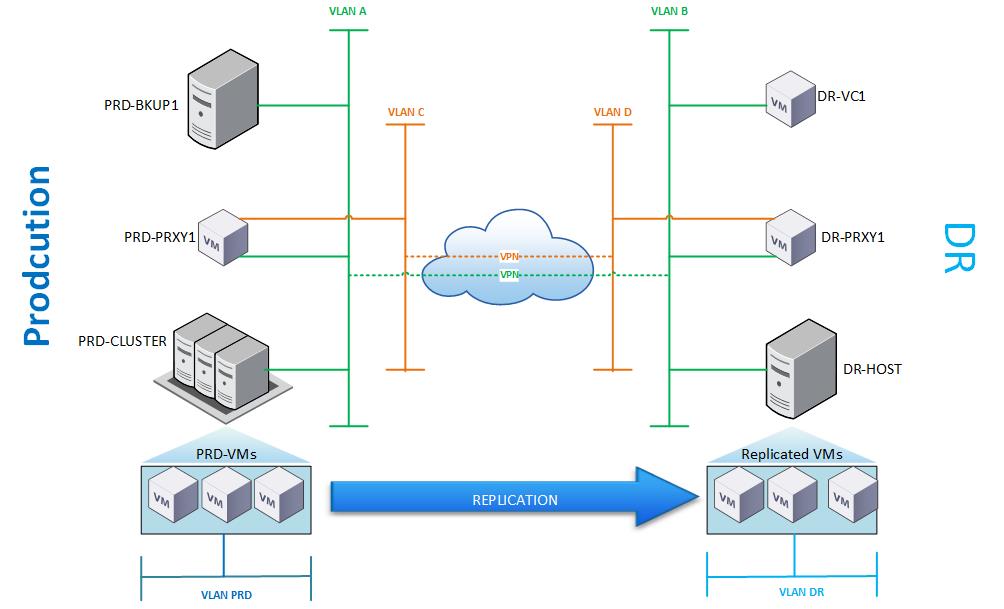

VMware introduced replication in vSphere 5.5. The biggest limitation is that it provides only a single restore point, which is an immediate show stopper for most customers. Multiple restore points are absolutely essential: just like "good" data, any corruption, virus or data loss on the source VM is immediately replicated to the target VM, and if you don't spot the problem and fail over to the replica fast enough (before the next replication cycle), which will be impossible in most cases, you are out of luck.

Other limitations:

- No failback
- No traffic compression
- No traffic throttling
- No swap exclusion
- No network customization (network mapping)
- No re-IP upon failover
- Minimum possible RPO is 15 minutes
- Basic VSS quiescing (no application-aware processing)
- Works within a single vCenter only
- No ability to create container-based jobs (explicit VM selection only)
- Limited seeding options: cannot seed from backup or use a different VM as a seed (disk IDs have to match)
- Different ports required for initial and incremental sync
- No good reporting

Also, be aware that the biggest marketing push around vSphere Replication is a technically incorrect statement:

“Unlike other solutions, enabling vSphere replication on a VM does not impact I/O load, because it does not use VM snapshots”

It is simply impossible, even in theory, to transfer a specific state of a running VM without some sort of snapshot! In reality, during each replication cycle vSphere Replication does create a hidden snapshot to keep the replicated state intact; it is just a different type of snapshot (exactly the same concept as a Veeam reversed incremental).

- PROS: No commit required; the snapshot is simply discarded after the replication cycle completes.
- CONS: While replication runs, there are 3 I/Os for each modified block that belongs to the replicated state. This is the I/O impact that got lost in the marketing.

Unlike VMware Replication, Veeam takes advantage of multiple restore points.
For every replica, Veeam Backup & Replication creates and maintains a configurable number of restore points. If the original VM fails for any reason, you can temporarily or permanently fail over to a replica and restore critical services with minimal downtime. If the latest state of a replica is not usable (for example, if corrupted data was replicated from source to target), you can select a previous restore point to fail over to. Veeam Backup & Replication uses VMware ESX snapshot capabilities to create and manage replica restore points. Replication of VMware VMs works similarly to forward incremental backup. During the first run of a replication job, Veeam Backup & Replication copies the original VM running on the source host and creates its full replica on the target host. You can also seed this initial copy at the target site. Unlike backup files, replica virtual disks are stored uncompressed in their native format. All subsequent replication job runs are incremental (that is, Veeam Backup & Replication copies only the data blocks that have changed since the last replication cycle).

Conclusion:

Veeam Replication really stands out against the feature-lacking VMware Replication. The numerous missing features, such as multiple restore points to help ensure data integrity, failback, traffic throttling and traffic compression, limit VMware Replication to simple use cases.
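The argument for multiple restore points can be made concrete with a toy model: replicate once per cycle, keep only the newest `retention` points, and ask whether any clean point survives once corruption is noticed. This is a simplified illustration assuming one restore point per replication cycle; it does not model how either vSphere Replication or Veeam Backup & Replication actually stores replicas, and `latest_clean_point` is a hypothetical helper.

```python
from __future__ import annotations

from collections import deque
from typing import Optional


def latest_clean_point(retention: int, cycles: int, corrupt_from: int) -> Optional[int]:
    """Newest clean restore point left on the target, or None.

    One restore point is created per replication cycle (numbered from 1), and
    only the newest `retention` points are kept. Source data is corrupt from
    cycle `corrupt_from` onward, so those points are unusable.
    """
    points: deque[int] = deque(maxlen=retention)  # oldest points fall off automatically
    for cycle in range(1, cycles + 1):
        points.append(cycle)
    clean = [p for p in points if p < corrupt_from]
    return max(clean) if clean else None


# Corruption lands in cycle 5 and is noticed after cycle 7.
print(latest_clean_point(retention=1, cycles=7, corrupt_from=5))  # None
print(latest_clean_point(retention=4, cycles=7, corrupt_from=5))  # 4
```

With a single restore point the clean copy is already gone; with four, cycle 4 is still available. Retention only buys time, though: if the corruption goes unnoticed for longer than the retained window, even multiple points won't help (`latest_clean_point(4, 9, 5)` also returns `None`).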

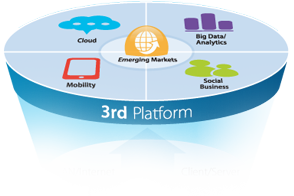

a Mobile Cloud model. He says that it's "Technology that disrupts and bridges to the mobile cloud." The companies are strategically aligned businesses, each focused and free to execute individually or together. The Federation provides customer solutions and choice for the software-defined enterprise and the emerging “3rd platform” of mobile, cloud, big data and social, transformed by billions of users and millions of apps. According to CEO David Goulden, the common vision is "To move from the 2nd platform to the 3rd." Each company plays a significant role in that vision. The Federation offers 5 areas of value:

The idea behind Federation Solutions is simple: shorten time-to-value and reduce implementation risk for these big enterprise IT agenda items.

Microsoft is finally recognizing where the future is headed and is changing its desktop licensing model to support it. You can see that here.

With the growing need to migrate from Windows XP and to offer more flexibility, customers can now feel confident exploring the growing variety of options available to them, be it cloud apps, virtualization, DaaS, clones or other delivery methods, to create their next-generation workspaces.
