I recently passed my VCP6-NV and wanted to take some time to blog about the experience and to gather some resources for those who are looking to pursue this certification. For those of you who may not know much about NSX, I will start with a brief introduction and explain why I feel you should pursue this certification for your company.

What is NSX? VMware NSX is the next evolution in software-defined everything. It is VMware's network virtualization and security platform, which came from the acquisition of Nicira back in 2012.

What does NSX do? NSX decouples network functions from the physical network devices in your data center, in a way that is analogous to how server virtualization decouples servers from the physical hardware. NSX natively recreates the traditional network constructs in software, including ports, switches, routers, firewalls and load balancers. I could write an entire blog just on the features of NSX and its integrations with third-party vendors such as Palo Alto Networks and Trend Micro; oh wait, I did. You can read that in my blog here. But that is not what this blog is about, so let's move on.

The VMware Certified Professional Network Virtualization exam tests candidates on their knowledge of, and ability to demonstrate, basic virtualization networking skills such as vSphere Standard Switches and vSphere Distributed Switches, installation and configuration of NSX, and finally administration of NSX. To pass the exam you will need an in-depth understanding of these areas. Hands-on experience with both NSX and vSphere is highly recommended; in fact, I believe VMware recommends at least 6 months of it. I would recommend setting aside dedicated time to go over the following resources, along with practicing packet walks and architecture design. These are the resources that I used to study for the exam over a period of 6 months.

Exam Objectives: Section 1 – Define VMware NSX Technology and Architecture

The test consists of 80+ questions, and you have approximately 1 minute per question. That doesn't seem like a lot of time, but it is plenty. You can also mark questions for review. I found that once I completed the exam, I had enough time to go back through all the questions once more to check for anything I missed.

Now that I have reviewed what NSX is and discussed the exam, the next question is: why should you take it? Certifications are a great way to show value to your company, but more importantly, NSX is the next big wave in the virtual realm. I chose to take this exam because I believe NSX is the next step in virtualizing the data center, and I wanted to be at the forefront, helping to lead the direction for my company and our customers. I have the same excitement about NSX that I felt when I first became engaged with ESX.

Since taking the exam, I have been traveling between Buffalo and Albany, NY, speaking to customers and whiteboarding their environments. This has led to better engagements with customers and within VMUG (VMware User Group), where I lead three groups: Albany (now Capital District), Syracuse and Rochester. NSX will change the face of networking just as vSphere did for physical servers. If you want to help drive the future direction of your company and help it become more secure, agile and flexible, or if your company, like many others, is in the process of developing its cloud strategy, then NSX can play a large role in that.


Bringing VMware NSX and Horizon together

Virtual desktop infrastructure (VDI) has become an increasingly popular virtualization option for many organizations and VMware customers. VMware continues to work with partners to advance the protection of VDI deployments. Most recently the focus has been on introducing advanced security controls by combining VMware NSX (the network virtualization platform) with Horizon 6 (VDI) environments. VDI in combination with NSX offers organizations the chance to make huge leaps forward in the security and management of their virtualized desktop deployments. Two big challenges that have slowed the adoption of large-scale desktop virtualization in the past are:

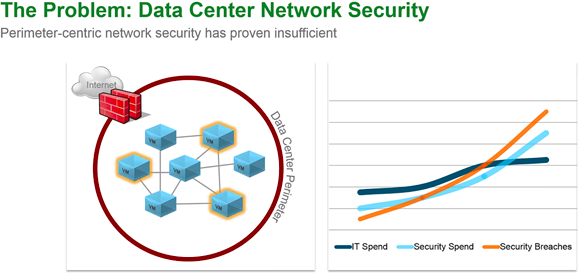

NSX addresses these concerns and much more. Security is especially critical for VDI deployments because of the need to limit “east-west traffic,” the internal traffic within the data center. This east-west traffic is monitored poorly, if at all, by traditional perimeter defenses. For example, a simple web-browsing or email mistake by a trusted end user could carry a threat right past those defenses into your data center, resulting in a breach. VMware NSX with Horizon enables micro-segmentation and automates the deployment and provisioning processes. This allows for the insertion of advanced security services from third parties, which include:

This provides instant, automated protection as soon as a new virtual desktop is spun up. NSX brings security inside the data center with automated, fine-grained policies tied to the virtual machines, while its network virtualization capabilities let you create entire networks in software without touching the underlying physical infrastructure.

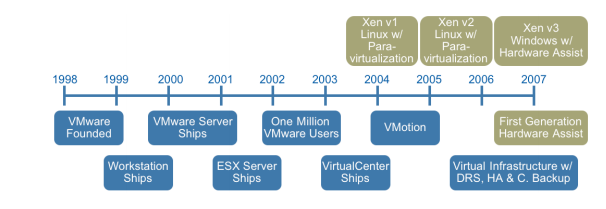

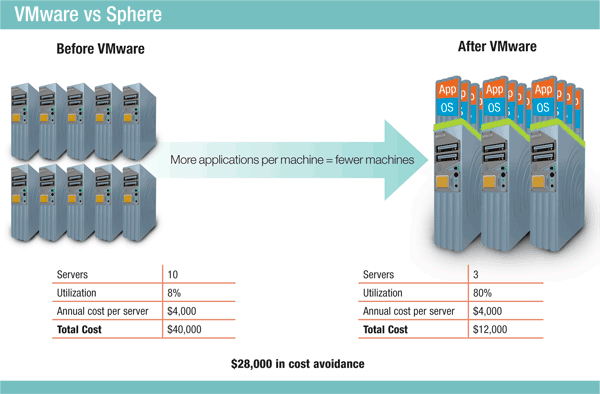

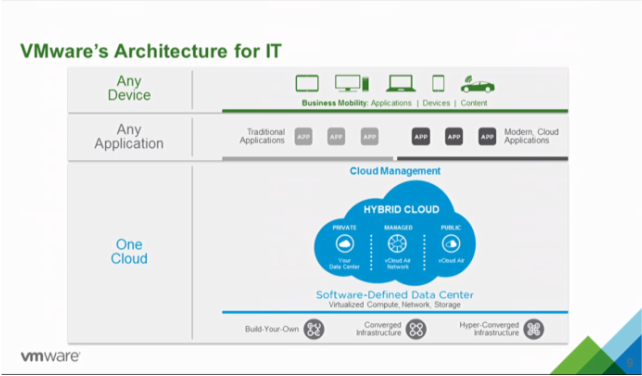

Here we are in the future that Back to the Future predicted, and I find myself contemplating what IT looked like in the past compared to now. For those of you who live under a rock and have not seen the movies, I will give a brief summary of the second movie in the trilogy. In "Back to the Future Part II," Marty McFly travels to October 21, 2015, to save his children, who are yet to be born in "Back to the Future's" 1985. The plot gets tangled: by fixing one thing, McFly, Doc Brown and the villainous Biff Tannen create a number of new mishaps. What remains, though, is the film's vision of a year that was still more than a quarter-century away when the movie was shot and released in 1989.

In the IT realm, I found myself reminiscing about what the data center looked like in 1989, when the movie was released, not to mention 1985, when the movie itself takes place. So hold onto your hats, "Great Scott!!", we are going back to revisit the data center before VMware's founding in 1998 and the impact we see today. In order to bore my readers thoroughly, I will give a brief history lesson on computing, but don't worry, I have added plenty of pictures to stimulate your brains. So let's fire this blog up to 88 miles per hour and get to the past.

Arriving in the 1980s, we find that mainframe computers, whose components took up a whole room, had been joined by minicomputers, whose components had been miniaturized to such an extent that everything could be housed in a single cabinet, even if these cabinets were still the size of commercial freezers. Computer components kept getting smaller and more powerful, giving rise to the microcomputer, or desktop PC. The early ones were sold in kit form, mainly to home enthusiasts. Eventually, though, they became more reliable, and the software developed for them meant they found their way into businesses.
Eventually, the ‘dumb’ terminals connected to a mainframe computer were replaced with microcomputers, each with its own processor and hard drive. However, because this segmented information, issues of data integrity and duplication soon led to the development of networks of server and client microcomputers. The servers often ended up housed in the computer rooms, either alongside or instead of the mainframes and minicomputers, often in 19-inch racks that resemble rows of lockers. In 1985, IBM provided more than $30 million in products and support over the course of 5 years to a supercomputer facility established at Cornell University in Ithaca, New York. This is what the data center looked like in the 1980s, and in the immortal words of Doc Brown, "Great Scott!"

Jumping back into the DeLorean and taking a quick trip forward to the 1990s, we find the data center still evolving, and microcomputers are now called “servers.” With the availability of inexpensive networking equipment, companies started putting server rooms inside their own walls. The biggest change in the nature of data centers came with the lifting of restrictions on the commercial use of the Internet. Companies needed fast Internet connectivity and nonstop operation to deploy systems and establish a presence on the Internet, and many companies started building very large facilities to provide businesses with a range of solutions for systems deployment and operation.

Enter the era of virtualization. In 1998, VMware comes onto the scene with a patent for its virtualization platform, and on February 8, 1999, VMware introduces the first x86 virtualization product, VMware Virtual Platform, based on earlier research by its founders at Stanford University. The impact is not fully realized at the time, but this event will change the future of data centers forever (see the timeline below).
VMware's solution was a combination of binary translation and direct execution on the processor that allowed multiple guest OSes to run in full isolation on the same computer with affordable virtualization overhead.

Now let's hop back into the DeLorean and take one last trip, to where we find ourselves today. VMware started this revolution nearly 17 years ago and continues to lead the industry in building out an operating-system-agnostic virtualization ecosystem that helps companies transform their IT environments. Today there is no alternative that compares to VMware's performance, stability, ease of management, security, support, features and vast partner ecosystem.

I guess the big question now is: what will the future hold for us in IT? The data center is now moving into the "cloud," with VMware again leading the charge with the idea of One Cloud, Any Application. We have seen the data center shrink its footprint by hosting multiple virtual servers on an x86 platform. We have seen the transformation of the business computer or workstation with VDI. We have seen the virtualization of storage with VSAN and VVOLs. We have seen the virtualization of the network with NSX, and we are seeing companies transform into the cloud with vSphere Hybrid Cloud. We have also seen the transformation of applications and application mobility with containerization and application virtualization.

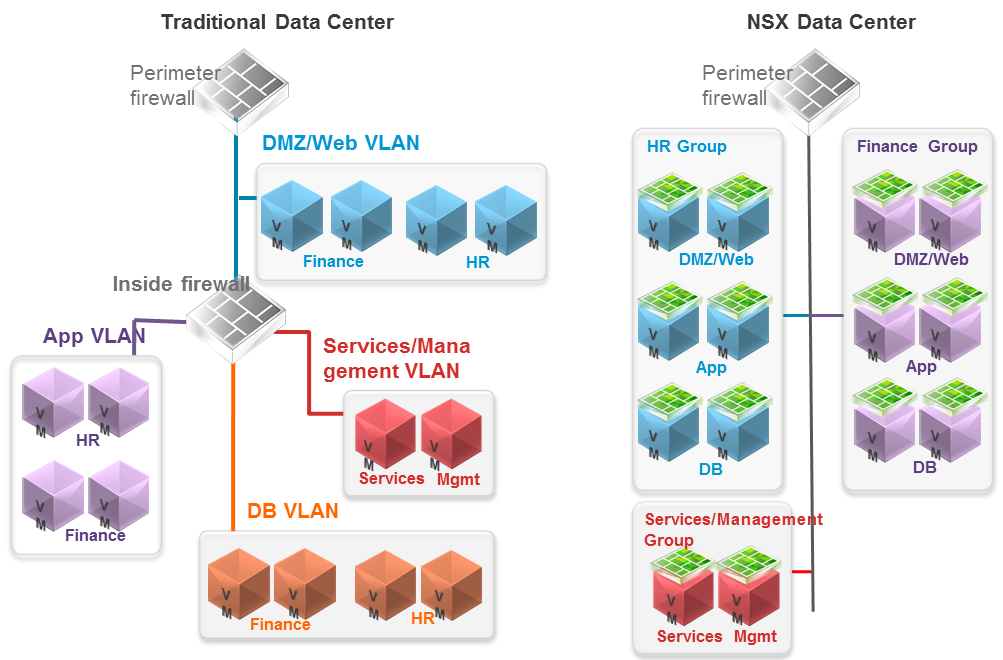

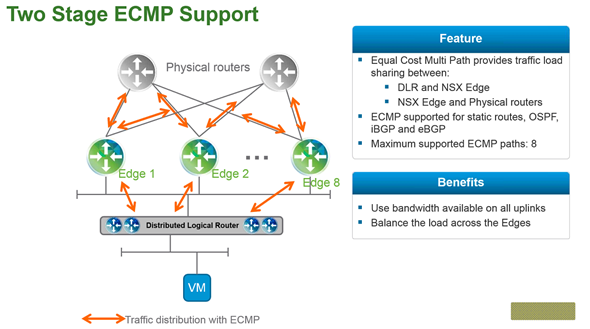

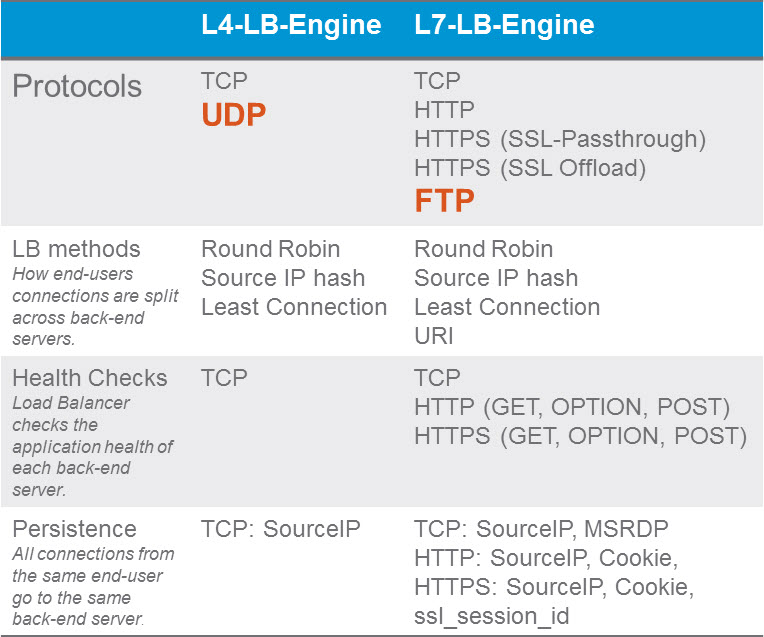

What will the future hold for those of us lucky enough to bear witness to it? Only time will tell, and I for one am off on one last adventure in the DeLorean to visit the future, where Dell owns the world of computing. I'll let everyone know how the stock does when they go public. See you all in the future.

Segmentation

It can be extremely difficult to secure your production environment and keep it secure. The traditional security model focuses on perimeter defense, but continued security breaches show that this model is not effective. Firewalls can be deployed at each VM, but that proves to be unmanageable. With NSX 6.1 it is possible to micro-segment your network so that only allowed traffic can travel through the network between virtual machines.

Traditionally, network segmentation is a function of a physical firewall or router, designed to allow or deny traffic between network segments or tiers. The traditional processes for defining and configuring segmentation are time consuming and highly prone to human error, resulting in a large percentage of security breaches. Implementation requires deep and specific expertise in device configuration syntax, network addressing, and application ports and protocols.

Network segmentation is a core capability of NSX. A virtual network can support a multi-tier environment, meaning multiple L2 segments with L3 segmentation, or micro-segmentation on a single L2 segment using distributed firewall rules. These segments could represent a web tier, an application tier and a database tier. Physical firewalls and access control lists deliver a proven segmentation function, trusted by network security and compliance teams. Confidence in this approach for cloud data centers, however, has been shaken, as more and more attacks, breaches and downtime are attributed to human error in antiquated, manual network security provisioning and change management processes.
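To make the three-tier example a little more concrete, here is a minimal Python sketch of how a first-match rule table with a default-deny rule at the bottom behaves when evaluated for traffic between web, app and database VMs. This is a toy model under stated assumptions: the tier names, the ports (443, 8443, 3306) and the `Rule`/`evaluate` helpers are hypothetical illustrations, not NSX's API or its distributed firewall rule syntax. In NSX the rules are defined centrally and enforced in each hypervisor at the VM's virtual interface.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Rule:
    """One hypothetical distributed-firewall-style rule (first match wins)."""
    src_tier: str          # "*" matches any source tier
    dst_tier: str          # "*" matches any destination tier
    port: Optional[int]    # None matches any destination port
    action: str            # "allow" or "block"

    def matches(self, src: str, dst: str, port: int) -> bool:
        return (self.src_tier in ("*", src)
                and self.dst_tier in ("*", dst)
                and self.port in (None, port))


# Hypothetical three-tier policy: only the listed flows are permitted.
RULES: List[Rule] = [
    Rule("external", "web", 443, "allow"),
    Rule("web", "app", 8443, "allow"),
    Rule("app", "db", 3306, "allow"),
    Rule("*", "*", None, "block"),  # default deny catches everything else
]


def evaluate(src: str, dst: str, port: int, rules: List[Rule] = RULES) -> str:
    """Return the action of the first rule that matches the flow."""
    for rule in rules:
        if rule.matches(src, dst, port):
            return rule.action
    return "block"  # defensive fallback if a rule table lacks a default deny


if __name__ == "__main__":
    for flow in [("web", "app", 8443), ("web", "db", 3306), ("web", "web", 22)]:
        print(flow, "->", evaluate(*flow))
```

The last rule is what turns this into micro-segmentation rather than perimeter filtering: a web VM cannot reach the database directly, and two web VMs on the same L2 segment cannot talk to each other unless a rule explicitly allows it.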
In a virtual network, network services (L2, L3, ACL, firewall, QoS, etc.) that are provisioned with a workload are programmatically created and distributed to the hypervisor vSwitch. Network services, including L3 segmentation and firewalling, are enforced at the virtual interface. Communication within a virtual network never leaves the virtual environment, removing the requirement for network segmentation to be configured and maintained in the physical network or firewall.

Two Stage ECMP

Equal-cost multi-path (ECMP) routing for distributed logical routers and NSX Edges was added in NSX 6.1. This gives NSX the ability to support ECMP in two stages: between the Distributed Logical Router (DLR) and the NSX Edges, and between the NSX Edges and the physical network. ECMP is limited to 8 paths. With this new feature you can make better use of available bandwidth through multiple NSX Edges and their respective uplinks.

Load Balancing

Every NSX Edge can provide load balancing, with support for IP hash, least connection, round robin and URI load balancing algorithms. NSX 6.1 includes the ability to load balance TCP, UDP and FTP.

Firewall Enhancements

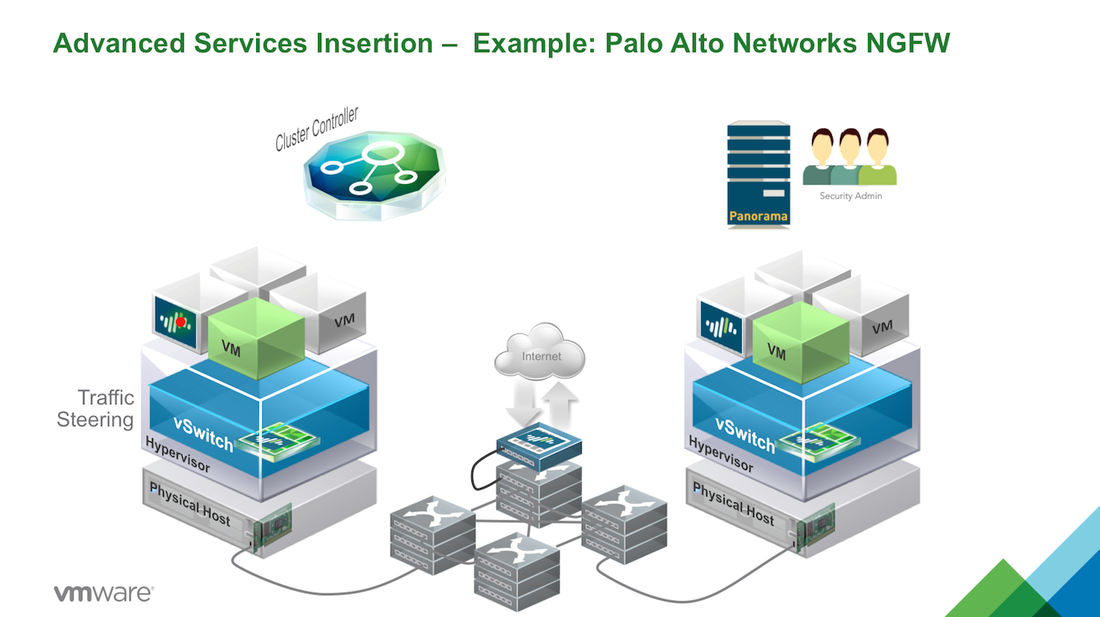

The integration uses the VMware NSX platform to distribute the Palo Alto Networks VM-Series next-generation firewall, making its advanced features locally available on each hypervisor. Network security policies defined for application workloads that are provisioned on, or moved to, that hypervisor are inserted into the virtual network's logical pipeline. At runtime, service insertion leverages the locally available Palo Alto Networks next-generation firewall feature set to deliver and enforce application-, user- and context-based control policies at the workload's virtual interface. VMware NSX provides a platform that allows automated provisioning and context sharing across virtual and physical security platforms. Combined with traffic steering and policy enforcement at the virtual interface, partner services traditionally deployed in a physical network environment are easily provisioned and enforced in a virtual network environment. VMware NSX thus delivers a consistent model of visibility and security across applications residing on both physical and virtual workloads.
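The traffic-steering idea can be pictured with a small toy model in Python: a policy at the virtual interface decides which flows are redirected to a partner service on the same host, and that service returns an application-aware verdict. Everything here is an assumption for illustration only: the security-group names, the application labels and the `partner_inspect` stand-in are hypothetical, and this is not how NSX or the Palo Alto Networks VM-Series are actually programmed. In a real deployment the steering policies are configured through NSX Manager and the inspection happens inside the VM-Series firewall.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Set


@dataclass(frozen=True)
class Flow:
    src_group: str  # hypothetical security group of the source VM
    dst_group: str  # hypothetical security group of the destination VM
    app: str        # application label, assumed to be identified by the partner firewall


# Stand-in for the partner next-generation firewall running locally on the host:
# it permits only the listed applications toward each destination group.
ALLOWED_APPS: Dict[str, Set[str]] = {
    "web": {"ssl", "web-browsing"},
    "db": {"mysql"},
}


def partner_inspect(flow: Flow) -> str:
    """Application-aware verdict for a steered flow (toy logic)."""
    return "allow" if flow.app in ALLOWED_APPS.get(flow.dst_group, set()) else "block"


# Steering policy applied at the virtual interface: traffic toward these
# destination groups is redirected to the partner service for inspection.
STEER_TO_PARTNER: Set[str] = {"web", "db"}


def vnic_pipeline(flow: Flow, inspect: Callable[[Flow], str] = partner_inspect) -> str:
    """Redirect matching flows to the partner service; others pass unchanged."""
    if flow.dst_group in STEER_TO_PARTNER:
        return inspect(flow)
    return "allow"  # not steered; in practice, regular firewall rules would still apply


if __name__ == "__main__":
    print(vnic_pipeline(Flow("app", "db", "mysql")))
    print(vnic_pipeline(Flow("app", "db", "ssh")))
```

Keeping the steering decision separate from the inspection function mirrors the split the paragraph above describes: NSX decides which traffic is sent where, while the partner service decides what that traffic is allowed to do.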

VMware CEO Pat Gelsinger announced a new hybrid cloud strategy today, along with a series of product updates, including a new version of vSphere, VSAN, VVOLs, a distribution of OpenStack and integration of NSX with vCloud Air. The new vision laid out by Gelsinger is a "Seamless and Complete Picture" of Any Device, Any Application and One Cloud. VMware spoke with its customers and found that they are focused on three key areas when it comes to IT:

With vSphere 6.0 as the foundation and new features including One Management (whether on premises or off), NSX built into vCloud Air, VSAN and VVOLs, the architecture is designed to deliver a unified cloud. Gelsinger states that "customers increasingly need a software-defined infrastructure to enable the level of speed, agility and flexibility to respond to the challenges of IT."

VMware vSphere 6.0

VMware is raising the bar again with more than 650 new features in vSphere 6.0. Some of the newly announced features include:

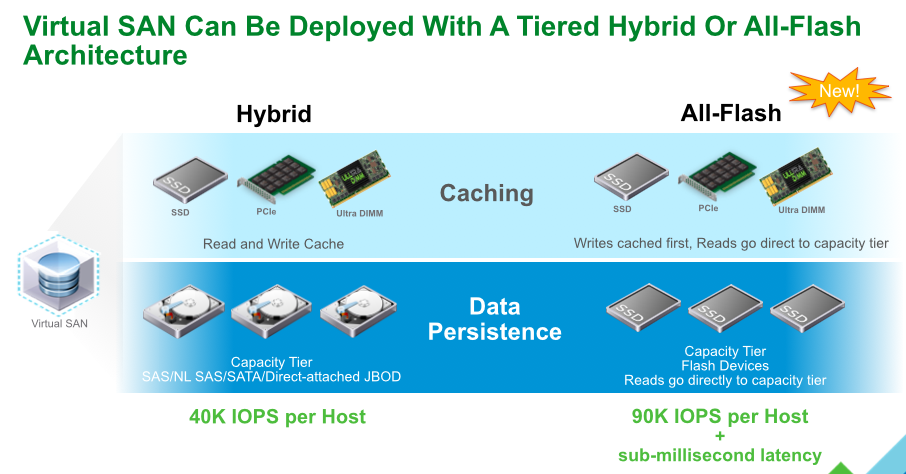

VMware VSAN

With significant improvements in scale and functionality, new features in VSAN include:

VVOLs

vSphere Virtual Volumes enable native virtual machine awareness on third-party storage systems. They are joined by VMware's Instant Clone technology, for cloning and provisioning thousands of virtual machines to build out a new virtual infrastructure.

OpenStack

VMware's OpenStack distribution will enable smaller IT departments with "little or no OpenStack or Linux experience" to deploy an OpenStack cloud within minutes.

VMware NSX

NSX will enable customers to achieve unprecedented security and isolation in a cloud with new features and enhancements.

VMware's Any Device, Any Application and One Cloud approach lets customers utilize multiple clouds to securely accelerate IT while managing through a single environment.
