
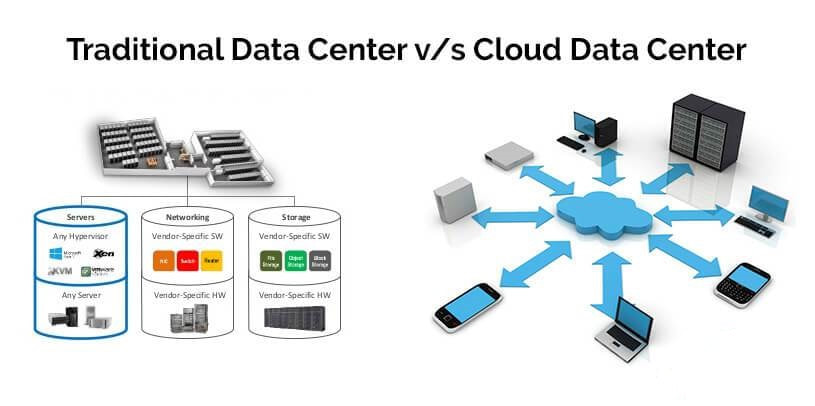

The movement toward a hybrid cloud, software-defined data center has been ongoing for years now. We have seen the virtualization of compute, storage, and now networking. In this blog, I will be discussing this journey: where we started, where we are going, and why you want to be on this journey.

Traditional data center models are still very prevalent and accepted by organizations as the de facto model for their data center(s). If you have ever managed a traditional data center model, then you know the mounting challenges we face within this model.

What comprises the traditional data center model?

A traditional data center model can be described as heterogeneous compute, physical storage, and networking managed by disparate teams, each with a specialized set of skills. Applications are typically hosted on their own physical storage, networking, and compute. All these entities (physical storage, networking, and compute) increase with the growth in size and number of applications. With growth, complexity increases, agility decreases, security complexities increase, and assurance of a predictable and repeatable production environment decreases.

Characterizations of a Traditional Data Center:

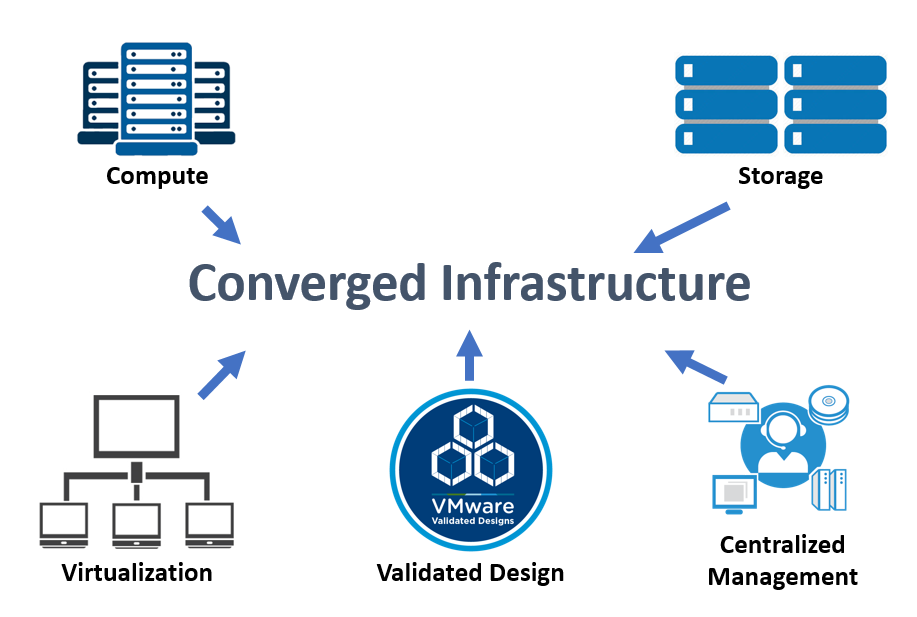

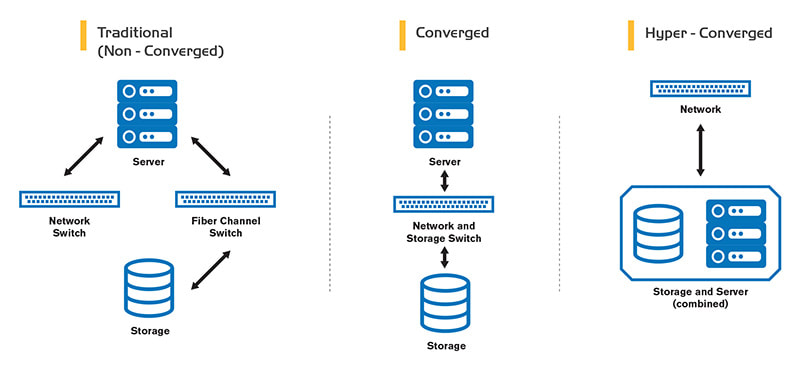

Challenges around supporting these complex infrastructures can include things like slow time to resolution when an issue arises, due to the complexities of a multi-vendor solution. Think about the last time you had to troubleshoot a production issue. In a typical scenario, you are opening multiple tickets with multiple vendors: a ticket with the network vendor, a ticket with the hypervisor vendor, a ticket with the compute vendor, a ticket with the storage vendor, and so on. Typically, they are all pointing fingers at each other, when we all know that fault always lies with the database admins.

The challenges aren't just around the complexities of design, day-to-day support, or administration; they also include lifecycle management. When it comes to lifecycle management, we are looking at the complexities around publishing updates and patches. If you are doing your due diligence, then you are gathering and documenting all the firmware, BIOS, and software versions from all the hardware involved in the update/patch and comparing that information against Hardware Compatibility Lists and Interoperability Lists to ensure that everything is in a supported matrix. If not, then you have to update before going any further. This can be extremely time consuming, and we are typically tasked with testing in a lab that doesn't match our production environment(s) to ensure we don't bring any production systems down during the maintenance window.

The first attempt at reducing the complexities we face with the traditional model came with the introduction of converged infrastructure. Converged introduced us to a pizza delivery model for infrastructure: we gather our requirements, place an order, and have it delivered ready to be consumed on premises. This new infrastructure model brought with it a reduction in the complexities that are inherent in the traditional model.

What is converged infrastructure?

Converged infrastructure is an approach to data center management that packages compute, storage, and virtualization on a pre-integrated, pre-tested, pre-validated, turnkey appliance. Converged systems include central management software. These pre-built appliances reduce concerns with support issues because the vendor supports the entire stack. You gain that "one throat to choke" when issues arise. You are no longer required to open multiple tickets with multiple vendors. One call to the supporting vendor and they handle troubleshooting for the hypervisor, compute, and storage. This can shorten time to resolution when issues present themselves.

You gain a reduction in data center footprint which, in turn, reduces power and cooling costs. I worked with a customer and reduced their multi-rack traditional data center to a single-rack solution. The cost savings were tremendous, as they were able to reduce the costs of not only the power and cooling, but also the space they paid for at the colocation facility.

With converged, you also gain a reduction in lifecycle management effort. When an update comes out from the vendor, they have already pre-validated and pre-tested the update/patch and know how it will affect your production environment. This means that you gain back all the time it takes to check the firmware, BIOS, and software against the HCL, etc. This can be a tremendous benefit, allowing you to deploy new updates/patches with assurance.

VMware Validated Designs were also introduced to provide comprehensive and extensively tested blueprints to build and operate a Software-Defined Data Center. With the VMware Validated Designs, VMware also allows for more flexibility with a build-your-own solution. Think of a Validated Design as a prescriptive method for SDDC. You follow the detailed guides and are assured of a specific outcome. Unlike the appliance approach, where the vendor pre-validates and pre-tests the solution and then builds it for you, with Validated Designs VMware handles everything but the build. This approach has four benefits:

The converged model does still present some challenges. You may not be able to move to the latest hypervisor software when it comes out, but most don't like to be the guinea pig anyway. Another challenge is with storage. Although storage is packaged and supported in this model, you still have to manage it as you would a traditional storage array. For example, if we need to build a new VM, we typically need to:

To further simplify the traditional model of infrastructure, VMware brought us the Software-Defined Data Center (SDDC) vision with the hyper-converged model.

What is hyper-converged infrastructure (HCI)?

Hyper-converged infrastructure allows the convergence of physical storage onto industry-standard x86 servers, enabling a building block approach with scale-out capabilities. All key data center functions run as software on the hypervisor in a tightly integrated software layer, delivering in software the services that were previously provided via hardware. This reduces the complexities of traditional storage administration while taking the intelligence of the array and bringing it into the software layer.

Take the previous example. Now, when we provision a VM, the storage is provisioned along with it. There is no need to log into the storage array to provision the LUN, or to handle zoning and masking, in order to present the newly created storage to the hypervisor environment. Management of the storage is performed through the same vCenter Server web interface that you use to manage the rest of the hypervisor environment. The hyper-converged environment further reduces the footprint at our data center(s) and the complexities we have in both traditional and converged environments. This new model of deploying an infrastructure gains us five benefits:

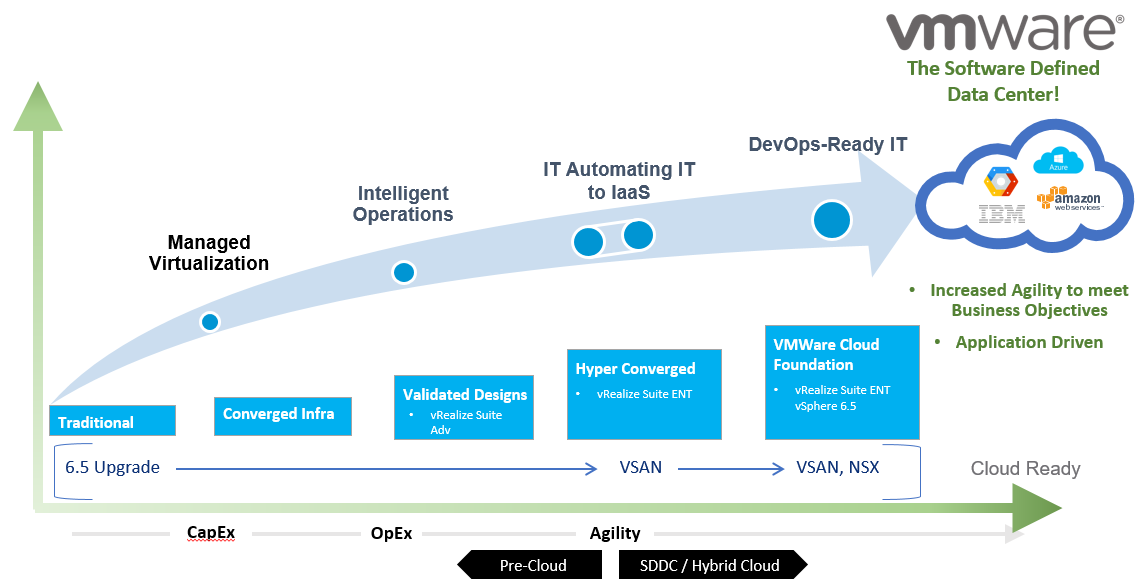
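
The provisioning difference described above can be sketched as data. This is only an illustration, not a VMware API: the `Step` type, the two workflow lists, and the tool labels are hypothetical names chosen for this example, and the steps themselves are taken from the description of building a VM on a traditional array versus on hyper-converged storage.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Step:
    action: str  # what the admin does
    tool: str    # where the admin does it (labels are assumptions for illustration)


# Steps named in the text for a traditional or converged storage array.
TRADITIONAL = [
    Step("provision a LUN", "storage array"),
    Step("configure zoning and masking", "storage array"),
    Step("present the new storage to the hypervisor hosts", "storage array"),
    Step("create the VM on the new storage", "vCenter"),
]

# With hyper-converged storage, the VM and its storage are provisioned together.
HYPER_CONVERGED = [
    Step("create the VM (storage is provisioned with it)", "vCenter"),
]


def tools_touched(workflow):
    """Return the distinct tools an admin logs into, in first-use order."""
    seen = []
    for step in workflow:
        if step.tool not in seen:
            seen.append(step.tool)
    return seen


def summarize(name, workflow):
    """Build a one-line summary of how many steps and tools a workflow needs."""
    return f"{name}: {len(workflow)} step(s) across {len(tools_touched(workflow))} tool(s)"


if __name__ == "__main__":
    print(summarize("traditional", TRADITIONAL))
    print(summarize("hyper-converged", HYPER_CONVERGED))
```

The counts are only as meaningful as the step lists; a real environment may split zoning and masking across additional tools or teams, which would widen the gap further.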

With hyper-converged, we have moved compute and storage into the software-defined layer. This simplifies the environment while retaining all the benefits of a converged infrastructure.

To recap, we have talked about where we began with the traditional data center model and the challenges of administering a traditional environment, along with the added benefits of converged and now hyper-converged infrastructures. Remember that at this point, we have software-defined the compute and the storage, but what about the network? In 2012, VMware acquired Nicira and one year later introduced network virtualization with NSX. To further the SDDC vision of an all software-defined data center, VMware virtualized the network. We now have compute, storage, and networking in the software stack.

This year at VMworld 2017, VMware introduced the next logical iteration in the journey of SDDC with VMware Cloud Foundation. VMware Cloud Foundation encompasses the best of VMware Validated Design and all the benefits of hyper-converged. It brings the three software-defined solutions (compute, storage, and networking) into a single package managed by the SDDC Manager. I wrote a previous blog post about VMware Cloud Foundation if you want more insight.

Why do we want to be on this journey?

VMware Cloud Foundation provides the simplest way to build an integrated hybrid cloud. It does this by providing a complete set of software-defined services for compute, storage, network, security, and cloud management. This allows the user to run enterprise apps, traditional or containerized, in private or public environments, and it is easy to operate thanks to built-in automated lifecycle management. This new model has four use cases:

To begin your journey toward this new infrastructure model and future-proof your data center for cloud, start by upgrading your current vSphere 5.x environment to 6.5. By upgrading to vSphere 6.5, you put your current infrastructure in an optimal place to take advantage of the latest vSAN and NSX deployments, along with the following benefits you gain from the new features in 6.5. Benefits of vSphere 6.5:

As you can see from the picture above, the journey doesn't end with VMware Cloud Foundation but continues to progress toward the true hybrid cloud solution that was announced this year at VMworld 2017. The announcement was a new partnership between VMware and Amazon. This new offering is an on-demand service that will allow you to extend your on-prem data center to the Amazon cloud, which runs VMware Cloud Foundation on physical hardware in Amazon's cloud data centers. This means no converting of workloads in order to take advantage of a cloud architecture, because this is running the same SDDC applications you are running today. VMware Cloud on AWS is ideal for customers looking to:

VMware Cloud on AWS is delivered, sold, and supported by VMware as an on-demand, scalable cloud service. This new model is the most flexible and agile model for future data centers. It will allow you to transform your business from one where hardware dictates where applications reside to one where applications drive the business in a hybrid cloud model, with the ability to easily migrate applications to wherever it makes the most sense in alignment with business requirements and objectives.

2 Comments

Russ Kaufmann

12/7/2017 04:16:32 pm

Love it

7/23/2023 10:02:50 pm

An insightful read on how to future-proof your data center for the cloud, offering valuable strategies for staying ahead in the ever-evolving tech landscape.
