
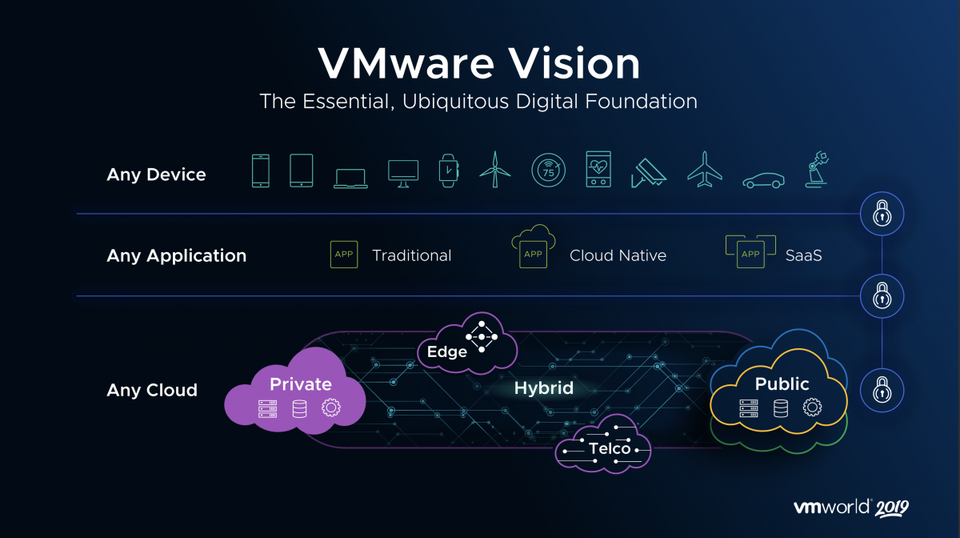

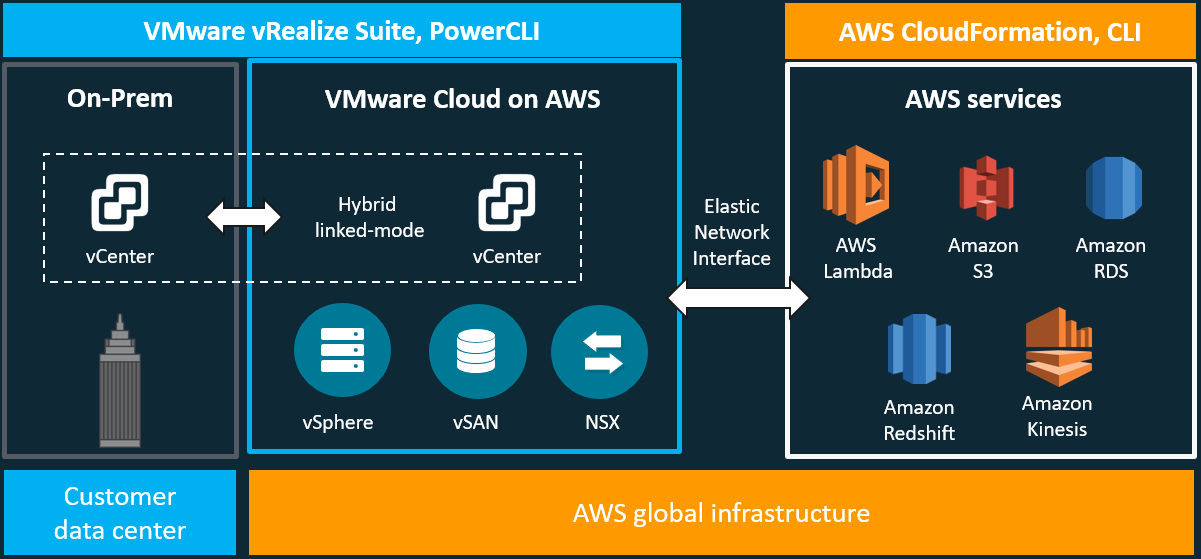

I wrote a blog about this subject before, which can be found here. The information in that blog is still relevant to this conversation; it walks you through the challenges of traditional three-tier architecture and how the industry, specifically VMware, has addressed those challenges. In this blog, I will be updating the vision that VMware has laid out for the hybrid cloud, which is composed of VMware Cloud on AWS and VMware Cloud Foundation. To better understand this journey and how we have arrived at this vision of Any Device, Any Application, and Any Cloud, take a look back at the previous blog. Let's begin with an overview of VMware Cloud on AWS.

Quick Overview of VMware Cloud on AWS

VMware Cloud on AWS is a jointly engineered and integrated cloud offering developed by VMware and AWS. Through this hybrid-cloud service, organizations can deliver a stable and secure solution to migrate and extend their on-premises VMware vSphere-based environments to the AWS cloud, running on bare-metal Amazon Elastic Compute Cloud (EC2) infrastructure.

VMware Cloud on AWS has several use cases, and most customers find themselves falling into one or more of them, often with some overlap. The first is the data center extension use case: organizations looking to migrate their on-premises vSphere-based workloads and extend their capacity to the cloud. The next is for organizations looking to modernize their recovery options, implement new disaster recovery (DR), or replace existing DR infrastructure. The last one that I will mention is for organizations looking to evacuate or consolidate their data centers through cloud migrations. This is great for organizations looking at data center refreshes.

VMware Cloud on AWS is delivered, sold, and supported by VMware and its partners, like Sirius Computer Solutions, a Managed Service Partner. It is available in many AWS Regions, which can be found here, and the list is growing. Through this offering, organizations can build their hybrid solutions on the same underlying infrastructure that VMware Cloud on AWS runs on: VMware Cloud Foundation.


Day 1 began with the general session, where VMware executives presented to the partner community and reinforced the importance of partners as the unsung heroes helping to drive the VMware business and, most importantly, driving value for their customers.

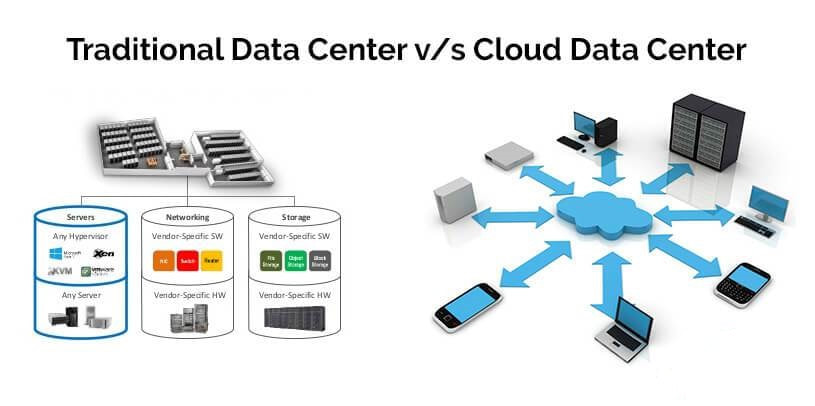

The movement toward a hybrid cloud, software-defined data center has been ongoing for years now. We have seen the virtualization of compute, storage, and now networking. In this blog, I will be discussing this journey: where we started, where we are going, and why you want to be on this journey. Traditional data center models are still very prevalent and accepted by organizations as the de facto model for their data center(s). If you have ever managed a traditional data center model, then you know the mounting challenges we face within this model.

What comprises the traditional data center model? A traditional data center model can be described as heterogeneous compute, physical storage, and networking managed by disparate teams, each with a unique set of skills. Applications are typically hosted on their own physical storage, networking, and compute. All of these entities (physical storage, networking, and compute) increase with the growth in size and number of applications. With growth, complexity increases, agility decreases, security complexities increase, and assurance of a predictable and repeatable production environment decreases.

Characterizations of a Traditional Data Center:

Challenges around supporting these complex infrastructures can include things like slow time to resolution when an issue arises, due to the complexities of a multi-vendor solution. Think about the last time you had to troubleshoot a production issue. In a typical scenario, you are opening multiple tickets with multiple vendors: a ticket with the network vendor, a ticket with the hypervisor vendor, a ticket with the compute vendor, a ticket with the storage vendor, and so on. Typically, they are all pointing fingers at each other, when we all know that fault always lies with the database admins.

The challenges aren't just around the complexities of design, day-to-day support, or administration; they also include lifecycle management. When it comes to lifecycle management, we are looking at the complexities around publishing updates and patches. If you are doing your due diligence, then you are gathering and documenting all the firmware, BIOS, and software versions from all the hardware involved in the update or patch and comparing that information against Hardware Compatibility Lists and Interoperability Lists to ensure that they are in a supported matrix. If they are not, then you have to update before going any further. This can be extremely time-consuming, and we are typically tasked with testing in a lab that doesn't match our production environment(s) while ensuring we don't bring any production systems down during the maintenance window.
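To make that compatibility check a little more concrete, here is a minimal Python sketch of the comparison step. Everything in it is an assumption for illustration: the component names, the version strings, and the `SUPPORT_MATRIX` layout are hypothetical and do not come from any vendor's actual compatibility list or API. In practice you would populate both sides from your own inventory and the vendor's published lists.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Component:
    name: str     # hypothetical component name, e.g. "host-01"
    kind: str     # "firmware", "bios", or "software"
    version: str  # version currently installed


# Hypothetical support matrix: (name, kind) -> versions listed as supported.
# Real data would come from the vendor compatibility and interoperability lists.
SUPPORT_MATRIX = {
    ("host-01", "bios"): {"2.4", "2.5"},
    ("host-01", "firmware"): {"1.10"},
    ("hypervisor", "software"): {"7.0"},
}


def find_unsupported(inventory, matrix):
    """Return components whose installed version is not in the matrix.

    A component that is missing from the matrix entirely is also treated
    as unsupported, since it cannot be verified.
    """
    return [
        c for c in inventory
        if c.version not in matrix.get((c.name, c.kind), set())
    ]


# Hypothetical inventory gathered before the maintenance window.
inventory = [
    Component("host-01", "bios", "2.3"),
    Component("host-01", "firmware", "1.10"),
    Component("hypervisor", "software", "7.0"),
]

blockers = find_unsupported(inventory, SUPPORT_MATRIX)
for c in blockers:
    print(f"Update required before patching: {c.name} {c.kind} {c.version}")
```

Anything returned in `blockers` is a component you would have to bring into the supported matrix before going any further with the update. Multiply this by every vendor in a multi-vendor stack and you can see where the time goes.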

